System Design 14 - YouTube

Show your videos to the world

Introduction

So, you want your product to support video upload, right? Well, it’s time we looked into it.

While this part talks about designing YouTube, we can also define the task as “Allow uploading and viewing stored videos”. So, it also applies to Netflix, Hulu, Disney and any other video streaming platform.

So, without further ado, let’s jump right into it!

Requirements

- Allow uploading and watching a video

- Mobile apps, web browsers, smart TVs

- 5 million daily active users

- Average of 30 minutes spent viewing per day per user

- Support international users

- Support multiple video resolutions and formats

- Maximum video file size is 1 GB

Back of the Envelope

- 5 million users

- 5 % upload 1 video daily

- Assume average video size is 30 % of maximum -> 300 MB

- Daily storage required ~= 5 million * 0.05 * 300 MB ~= 250 000 * 300 MB ~= 75 000 000 MB ~= 75 TB
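
To double check the arithmetic, here is a minimal sketch of the same estimate. The constant names are mine, the numbers come from the list above, and I use decimal units (1 TB = 1 000 000 MB).

```python
# Back-of-the-envelope storage estimate, using the figures from the list above.
DAILY_ACTIVE_USERS = 5_000_000
UPLOAD_PERCENT = 5        # 5 % of users upload one video per day
AVG_VIDEO_MB = 300        # 30 % of the 1 GB maximum

uploads_per_day = DAILY_ACTIVE_USERS * UPLOAD_PERCENT // 100
daily_storage_mb = uploads_per_day * AVG_VIDEO_MB
daily_storage_tb = daily_storage_mb / 1_000_000   # decimal units

print(uploads_per_day)     # 250000
print(daily_storage_tb)    # 75.0
```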

We need 75 TB of new storage every day. Furthermore, we very likely want to cache the videos. A Content Delivery Network (CDN) is great for that, but for videos, it’s a little more complicated. We can take a look at Amazon CloudFront CDN pricing to get an idea.

With 75 TB of new storage required daily, we can estimate that our CDN would need to hold 2250 TB, or 2.25 PB, after a month. After a couple of months, we’d have even more. If we assume a volume of more than 4 PB monthly, the price per GB is 0.020 USD in the US.

- 5 million users * 5 videos per day * average video size 300 MB

- 25 million * 300 MB = 7500 million MB ~= 7.5 million GB

- 7.5 million GB * 0.02 USD ~= 750 000 * 0.2 USD ~= 150 000 USD per day for CDN
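
The CDN cost estimate can be sketched the same way. The constant names are mine, and the flat 0.02 USD per GB price is the simplification used above, not a real pricing model with tiers.

```python
# Rough daily CDN cost, assuming every watched video is served from the CDN
# at a flat price per GB (a simplification of real tiered pricing).
DAILY_ACTIVE_USERS = 5_000_000
VIDEOS_WATCHED_PER_USER = 5
AVG_VIDEO_MB = 300
PRICE_PER_GB_USD = 0.02

daily_traffic_mb = DAILY_ACTIVE_USERS * VIDEOS_WATCHED_PER_USER * AVG_VIDEO_MB
daily_traffic_gb = daily_traffic_mb / 1000          # decimal units
daily_cost_usd = round(daily_traffic_gb * PRICE_PER_GB_USD)

print(daily_traffic_gb)   # 7500000.0
print(daily_cost_usd)     # 150000
```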

High Level Design

Now, let’s hop into high level design. So, let’s consider YouTube. What’s happening over there?

- You upload a video

- YouTube starts processing the video

- You can always change the metadata of the video

- Once video is completely processed, users can watch it

So, we basically have 3 different user flows:

- Upload a video

- Update video metadata

- Watch a video

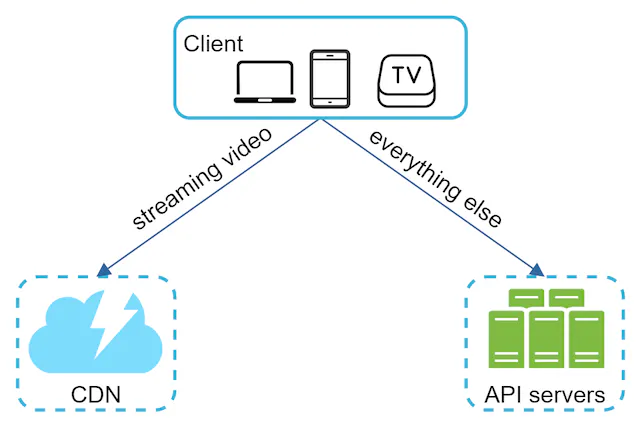

At a very high level, it’ll look something like this:

- Watching a video means streaming it from the CDN

- All other updates are handled by API servers

Upload a video

So, what do we actually need to care about when we’re uploading a video? Well, if you’ve ever tried to create your own video, you probably have an idea:

- Videos are LARGE. For example, my iPhone creates massive videos by default because of its high-quality camera

- However, to make it sharable to someone and not take ages, I can “downscale” the quality by changing some properties

- In essence, we need to:

- Store the videos

- Perform some encoding to make it workable

- Once it is done, perform some final tasks

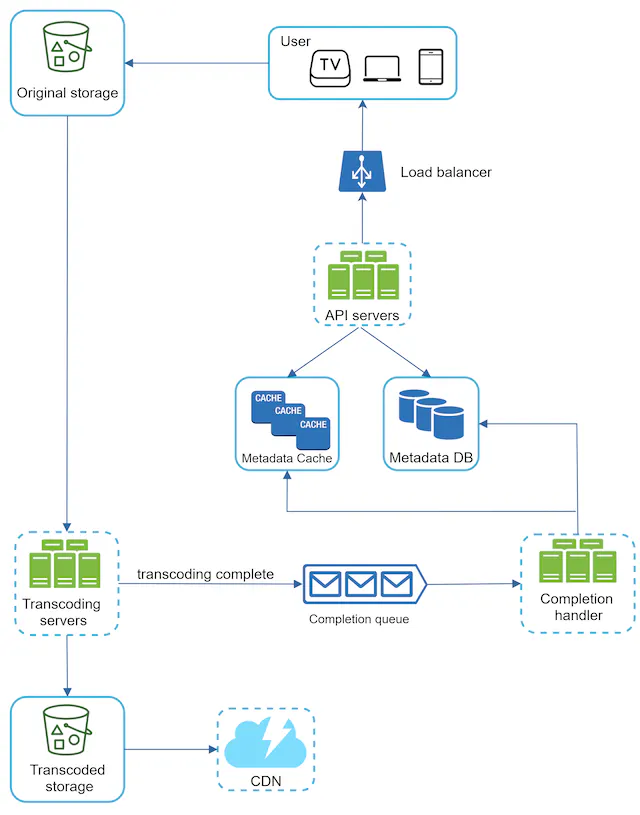

So, uploading a video can look something like this:

- A user selects a video from his own device

- He then uploads it to our API servers

- API servers contain metadata storage and cache for already uploaded videos

- While the video is being uploaded, its metadata is stored

- Video is uploaded to a so-called “Original Storage”, which is basically our storage for unprocessed videos

- Once the video is processed, it’s removed from the storage

- Now, we need to understand how it’s stored. I’ll talk about it later, but essentially, videos are stored as Binary Large Objects, or Blobs for short.

- Once a video is uploaded, then Video Encoding (or transcoding) happens.

- After video is encoded, it’s saved into a storage and some completion tasks are done

- Completion tasks can be updating the metadata, or sending notification to users

- We also want to push the videos to CDN for faster delivery when watched

Now, that was quite fast! But don’t worry, we’ll deep dive into video encoding later on. That’s probably the most complicated part.

Update a video

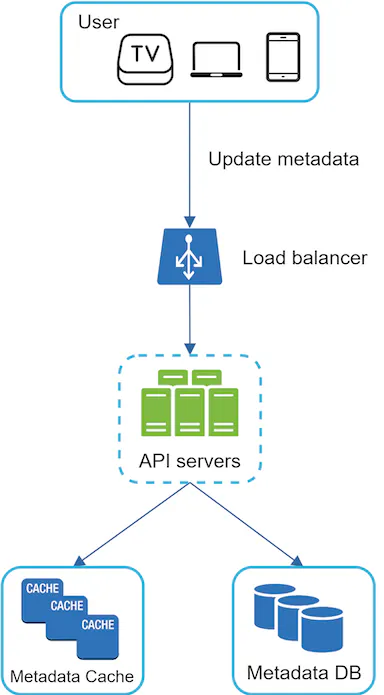

Now, once a user has uploaded a video, whether its processing is completed or still in progress, we may want to update the description or some other metadata. In essence, this part is just updating some metadata storage. A potential solution is shown in the diagram above.

Watch a video

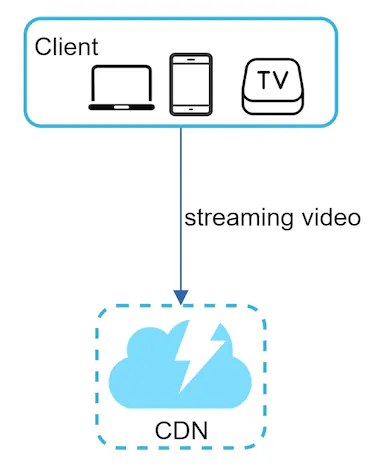

Finally, when a video is being viewed, we want to serve it from the CDN. See the diagram above to get an idea of how it works.

Now, it’s not really that simple. There are some parts we need to understand:

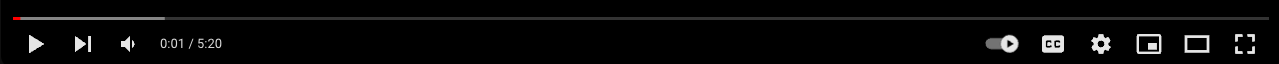

- When we open YouTube, we can see the length of the video

- We can also see that some parts are black in the video navigator, see the image above

- The red part is already played

- The gray part is “ready to play”

- The black part is “not ready to play”

Now, what it means is basically that the red and gray parts (played, ready to play) are already downloaded to the device (in this case the browser).
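
To make the three colours more concrete, here is a small sketch of how a player could track them. The `PlayerState` class and its field names are made up for illustration, this is not how YouTube’s player actually works.

```python
from dataclasses import dataclass


@dataclass
class PlayerState:
    duration_s: int         # total length of the video
    played_s: int           # current playback position (end of the "red" part)
    buffered_until_s: int   # last second already downloaded (end of the "gray" part)

    def bar(self, width: int = 20) -> str:
        """Render the navigator: '#' played, '=' ready to play, '.' not downloaded."""
        cells = []
        for i in range(width):
            t = i * self.duration_s / width   # start time of this cell
            if t < self.played_s:
                cells.append("#")
            elif t < self.buffered_until_s:
                cells.append("=")
            else:
                cells.append(".")
        return "".join(cells)


state = PlayerState(duration_s=600, played_s=150, buffered_until_s=300)
print(state.bar())   # #####=====..........
```

The point is that the device only ever holds the video up to `buffered_until_s`, never the whole file at once.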

But that’s interesting! Because it means we don’t just send the whole video at once. There are a lot of details I don’t know either, but to get an idea, this is handled by streaming protocols such as MPEG-DASH or Apple HLS, which deliver a video piece by piece.

Blob

I’ve mentioned before that videos are stored as Blobs. But what is a blob? From Wikipedia:

A binary large object (BLOB or blob) is a collection of binary data stored as a single entity. Blobs are typically images, audio or other multimedia objects…

Now that’s helpful. Except it’s not, at least for me. So, I’ve dug around and found that some examples can be:

- Video (MP4, MOV)

- Images (JPG, PNG) and documents (PDF)

- Audio (MP3)

- Graphics (GIF)

For more info about blobs, see the TokenEx article.

Finally, there are some storage types, such as Azure Blob Storage. The description may give us some more ideas:

Blob Storage is designed for:

- Serving images or documents directly to a browser.

- Storing files for distributed access.

- Streaming video and audio.

So, in short, blobs are binary files of various types that we want to store as a whole and serve later on.

Deep Dive

Now, that’s a really good start I feel. We have an idea of how to upload, update and view a video! So, let’s get deeper.

In this part, I’d like to focus on the following parts:

- Video transcoding

- System optimization - performance & costs

Now, if we’d take a look at YouTube, we can see a lot more things:

- Autocomplete

- Notifications after video is done processing

- Rate limiting

I won’t cover these here because it’d blow up A LOT. But, we could also have these services. If you need a refresher, take a look at the previous articles in this series that covered them.

Video transcoding

Video encoding is probably the most complicated and time-consuming task in this chapter, so bear with me while I try to make it more approachable.

So, where do we start? Well, I’ve described it already, but let’s take a look once again at what video encoding is and why we need it:

- Raw video consumes a lot of space. An hour-long HD video can easily consume hundreds of GBs.

- To quote MDN Web Docs:

- A minute of HD video would need 14.93GB storage

- 2 hour video movie would take 1790 GB of storage

- Storing uncompressed videos is simply not feasible. And sharing them over the network is a completely different world.

- Browsers or devices can support only a small subset of all formats. See the above documentation for supported media types. The most common are MP4, OGG or WebM

- We may want to store multiple types of videos. For example, if users have bad network, we’d like to send 144p video, while those with good internet might want HD videos.
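
The storage figures quoted above can be reproduced with a few multiplications. This is a sketch under my assumptions of 1920x1080 frames, 4 bytes per pixel and 30 frames per second, which is what those numbers appear to be based on.

```python
# Size of uncompressed HD video, assuming 1920x1080, 4 bytes per pixel, 30 fps.
WIDTH, HEIGHT = 1920, 1080
BYTES_PER_PIXEL = 4
FRAMES_PER_SECOND = 30

bytes_per_frame = WIDTH * HEIGHT * BYTES_PER_PIXEL
bytes_per_second = bytes_per_frame * FRAMES_PER_SECOND
gb_per_minute = bytes_per_second * 60 / 1e9      # decimal GB
gb_per_two_hours = gb_per_minute * 120

print(bytes_per_frame)               # 8294400
print(round(gb_per_minute, 2))       # 14.93
print(round(gb_per_two_hours))       # 1792
```

The 2 hour result lands at roughly 1790 GB, which matches the quoted figure after rounding.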

Now, encoding formats often have 2 parts:

- Container

- Basically wraps the video stream, audio and metadata into a single file

- Can be seen from the extension

- Common containers are .avi, .mov or .mp4. Note that for example .avi is not supported by most browsers.

- Codecs

- Compression and decompression algorithms to reduce video size while preserving quality

- Common ones are H.264, VP9 or HEVC

So, the bottom line is - we need to do a lot of stuff! So where do we start?

Directed Acyclic Graph

Now the above is just a fancy term. Kind of. A Directed Acyclic Graph, or DAG for short, is an important concept in graph theory and computer science. To avoid the mathematics, the bottom line is:

- It’s a directed graph, meaning that it consists of nodes and edges, where every edge has a direction, so a node can only be reached from the nodes that point to it

- It doesn’t form a closed loop, meaning that by following the edges we can never get back to a task we’ve already completed

That’s a lot of fancy wording again. How will we use it here? Well, it’s basically a tool with which we can describe the system in a very solid way. It shows that some parts must be completed before others, and it’s also good for describing relationships between data models.

It also shows which tasks must be executed sequentially and which can be parallelized. For example, we can process video, audio and metadata simultaneously, but we can’t assemble the final video until all of them have been completed.

Consider the following DAG for video encoding:

With this, we can immediately see that:

- The original video can be processed by 3 separate services

- One for video tasks

- One for audio tasks

- One for handling metadata

- Only when all the individual parts have been finished can we assemble the video back together
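
The DAG described above can be sketched with `graphlib` from the Python standard library (Python 3.9+). The task names are my own labels for the nodes, and `stages` is a hypothetical helper that groups tasks which can run in parallel.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks it depends on.
encoding_dag = {
    "video_tasks": {"original_video"},
    "audio_tasks": {"original_video"},
    "metadata_tasks": {"original_video"},
    "assemble": {"video_tasks", "audio_tasks", "metadata_tasks"},
}


def stages(graph):
    """Group tasks into stages; tasks inside one stage can run in parallel."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()                 # raises graphlib.CycleError on a closed loop
    result = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        result.append(ready)
        sorter.done(*ready)          # mark the whole stage as finished
    return result


for stage in stages(encoding_dag):
    print(stage)
# ['original_video']
# ['audio_tasks', 'metadata_tasks', 'video_tasks']
# ['assemble']
```

If we accidentally made `original_video` depend on `assemble`, `prepare()` would raise an error, which is exactly the “no closed loop” rule.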

So, let’s take a look at it deeper, because this is a flow we’ll use!

- When the original video is stored, we need to reduce the size of it

- We’ll simultaneously perform 3 different types of operations

- We’ll retrieve metadata from the video

- To reduce the audio size, we’ll perform audio encoding

- To reduce video size, we’ll perform video encoding

- However, we may want to do more stuff with the video:

- Inspection -> Making sure the video can be processed by our system and is not malformed. Processing a malformed video would only waste computational resources

- Thumbnail -> Users can provide a thumbnail for a video. If there is not one, we might want to get one from the video

- Watermark -> Users may want to make sure that the video is theirs and can’t be stolen. We can add watermarks to it

- Any other operation we might deem fit

- When all the operations have been finished, we have individual smaller video parts that we can assemble together

Now, one thing to note is what I’ve already mentioned - multiple qualities. We’ll most likely have to store multiple encodings of each video.

Consider that a user uploads his video of 5 mins in original size of 10 GB.

- We’ll encode this video to 4k, meaning 750 MB per minute, meaning 3.75 GB

- We then return this video to a user with bad internet. He’d never be able to watch this video

- So, we also encode this video in 144p, meaning 1.3MB per minute, a total of 6.5 MB

- User can easily watch this video

For the video rates described above, I’ve used the TechAdvisor article.
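
To see why the user with bad internet has no chance with 4k, we can turn the rates into total sizes and the bandwidth needed to watch without buffering. This is a sketch using only the two rates mentioned above, the function names are mine.

```python
# Per-minute sizes taken from the example above.
VIDEO_MINUTES = 5
MB_PER_MINUTE = {"4k": 750, "144p": 1.3}


def total_size_mb(resolution):
    return MB_PER_MINUTE[resolution] * VIDEO_MINUTES


def required_mbit_per_s(resolution):
    # MB per minute -> megabits per second (8 bits per byte, 60 seconds per minute)
    return MB_PER_MINUTE[resolution] * 8 / 60


for resolution in MB_PER_MINUTE:
    print(resolution,
          round(total_size_mb(resolution), 1), "MB,",
          round(required_mbit_per_s(resolution), 2), "Mbit/s")
# 4k 3750 MB, 100.0 Mbit/s
# 144p 6.5 MB, 0.17 Mbit/s
```

So the 4k version needs a steady connection of about 100 Mbit/s, while the 144p version gets by with a fraction of 1 Mbit/s.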

So, the final output of this will likely be something like:

- User uploads a video in his own format. The video will be e.g. bunny.mp4

- We’ll process this video and make multiple encodings of it:

- bunny4_144p.mp4

- bunny4_240p.mp4

- bunny4_360p.mp4

- bunny4_480p.mp4

- bunny4_720p.mp4

- bunny4_1080p.mp4

- Finally, we’ll return the video for specific users based on their preferences. By default, we can try to set them based on their network speed.

So, now that we have a general idea, we can continue into architecture of this video transcoding process!

Video Transcoding Architecture

This is the architecture we’re gonna use. Now, look at the picture and try to assign the parts to those we’ve discussed!

- Storage - We need a temporary storage which we use for each individual video. Once it’s been processed, we can clear it as we have a transcoded storage at the end

- Preprocessor - We’ll need to preprocess a video so that we can perform the tasks on it

- DAG Scheduler - We’ll schedule individual tasks depending on the current state of video processing

- Resource Manager - We’ll want to use only available resources and not overflow some parts while others are empty

- Task workers - Individual tasks can be performed in parallel. We’ll have separate workers using temporary storage for that

- Finally, the output will be encoded video (or videos).

So, let’s look at the individual parts!

With Temporary Storage, we may want to have multiple storages depending on the type of data. For example, metadata can be easily kept in memory, while video and audio would likely need a blob storage.

In Preprocessing, we’ll do a very interesting task. We’ll split the video into different parts.

- Imagine you have an hour long video

- If you split it into 60 one-minute parts, you can process all the individual minutes in parallel

- If you keep track of their position, you can then put them back together

So, what we’ll do is split the video into Groups of Pictures (GOPs), chunks of frames that can be processed independently of each other. By doing so, we can process them in parallel and speed things up

- Based on the split, we’ll create a DAG config.

- If we have a 1-minute video split into 1-minute chunks, there is only one chunk, so the split won’t help us

- Different videos might have different GOP sizes, so we’ll create a config that will be used later on to process the video parts

Finally, we’ll store all these items in temporary storage. In case one part breaks, we can resume from the last point of failure.
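
Here is a minimal sketch of the split, process in parallel and reassemble idea. The “video” is just a list of strings and `encode_chunk` is a stand-in for real encoding, so treat all names as hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def split_into_chunks(frames, chunk_size):
    """Split the frames into (position, chunk) pairs so we can reassemble later."""
    return [(index, frames[start:start + chunk_size])
            for index, start in enumerate(range(0, len(frames), chunk_size))]


def encode_chunk(index, chunk):
    # Stand-in for real encoding: we just lowercase the frame names.
    return index, [frame.lower() for frame in chunk]


def transcode(frames, chunk_size, max_workers=4):
    chunks = split_into_chunks(frames, chunk_size)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(encode_chunk, index, chunk) for index, chunk in chunks]
        # Chunks may finish in any order...
        encoded = [future.result() for future in as_completed(futures)]
    # ...so we sort by the stored position before putting them back together.
    encoded.sort(key=lambda item: item[0])
    return [frame for _, chunk in encoded for frame in chunk]


frames = [f"FRAME{i}" for i in range(10)]
print(transcode(frames, chunk_size=3))
```

Keeping the position next to every chunk is what allows the workers to finish in any order.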

The DAG Scheduler basically breaks the graph down into individual tasks. For example, we may have 4 different tasks:

- Video Encoding

- Audio Encoding

- Thumbnail Generation

- Watermark addition

This scheduler basically splits these tasks into stages, so it’s clear which tasks can run at the same time and which have to wait for the previous stage.

Resource Manager manages resource allocation. How surprising!

Basically, we can have multiple queues here:

- Running queue - workers and tasks being processed and their status.

- Worker queue - Queue of workers and their utilization.

- Task queue - Priority queue of tasks to be performed

- Task scheduler - Basically the coordinator of this part. It retrieves the highest priority task and the most suitable worker, and puts the pair into the running queue.

Once the task scheduler defines a task and worker to be processed, it’s forwarded to the task workers.
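
The queues above can be sketched with `heapq`. This is a simplified model under my own assumptions: a lower number means higher priority, and the “optimal” worker is simply the one with the fewest assigned tasks.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class Task:
    priority: int                        # lower number = higher priority
    name: str = field(compare=False)


@dataclass(order=True)
class Worker:
    load: int                            # number of tasks currently assigned
    name: str = field(compare=False)


class ResourceManager:
    def __init__(self, worker_names):
        self.task_queue = []             # priority queue of pending tasks
        self.worker_queue = [Worker(0, name) for name in worker_names]
        heapq.heapify(self.worker_queue)
        self.running_queue = []          # (task, worker) pairs in progress

    def submit(self, priority, name):
        heapq.heappush(self.task_queue, Task(priority, name))

    def schedule_next(self):
        """Task scheduler: pair the most urgent task with the least loaded worker."""
        if not self.task_queue:
            return None
        task = heapq.heappop(self.task_queue)
        worker = heapq.heappop(self.worker_queue)
        worker.load += 1
        heapq.heappush(self.worker_queue, worker)
        self.running_queue.append((task.name, worker.name))
        return task.name, worker.name


manager = ResourceManager(["worker-1", "worker-2"])
manager.submit(3, "thumbnail")
manager.submit(1, "video_encoding")
manager.submit(2, "audio_encoding")
scheduled = [manager.schedule_next() for _ in range(3)]
print([task for task, _ in scheduled])
# ['video_encoding', 'audio_encoding', 'thumbnail']
```

A real resource manager would also remove finished tasks from the running queue and lower the load of the worker, which I’ve left out to keep it short.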

Task Workers are basically individual services performing the task. We can perform audio and video encoding simultaneously, just on different servers.

Finally, the output will be Encoded Video that can be in multiple formats.

System Optimizations

Now that we know how we will process the individual videos, let’s perform some optimizations! Let’s start with video uploading!

- We’ve already mentioned GOPs to make video processing faster. However, we may be able to do the splitting directly on the client.

- So, instead of uploading an hour-long video in one piece, we’ll upload 60 one-minute chunks to the original storage in the first place (as we’d be splitting it ourselves later on anyway).

Another thing we can do is place upload centres close to users. If users had to upload videos from Europe to the US, it’d take a very long time. So, we’ll use the CDN as upload centres as well.

Now, let’s look at the complete thing we’ve designed above:

With this design, we have multiple modules:

- Once data is stored, download it for processing

- Once it’s downloaded, process it

- Once it’s processed, upload it to storage

- Once it’s stored, push to CDN

Now, there are some problems with this. Specifically:

- If there’s a spike, there’s a chance we’d download a lot of videos simultaneously

- The processing would have to wait until all videos are downloaded

- The uploading would wait for all videos to be processed

So, we’ll introduce a message queue!

By adding a message queue between the individual modules, we get a more decoupled system

- Instead of waiting for download, we’ll be watching a queue

- If there’s anything in the queue, we’ll start processing it

- It leads to more even load and better performance
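
Here is a minimal sketch of the decoupled pipeline, using in-process queues and threads instead of a real message broker. The stage names and the `STOP` marker are my own choices for the example.

```python
import queue
import threading

STOP = object()   # marker telling a stage that no more work is coming


def stage(name, inbox, outbox, log):
    """Watch the inbox and handle items as soon as they arrive."""
    while True:
        item = inbox.get()
        if item is STOP:
            if outbox is not None:
                outbox.put(STOP)        # pass the shutdown signal downstream
            break
        log.append((name, item))        # stand-in for the real work
        if outbox is not None:
            outbox.put(item)


def run_pipeline(videos):
    download_q, process_q, upload_q = queue.Queue(), queue.Queue(), queue.Queue()
    log = []
    threads = [
        threading.Thread(target=stage, args=("download", download_q, process_q, log)),
        threading.Thread(target=stage, args=("process", process_q, upload_q, log)),
        threading.Thread(target=stage, args=("upload", upload_q, None, log)),
    ]
    for thread in threads:
        thread.start()
    for video in videos:
        download_q.put(video)
    download_q.put(STOP)
    for thread in threads:
        thread.join()
    return log


log = run_pipeline(["bunny.mp4", "cat.mp4"])
print(len(log))   # 6
```

Notice that the processing stage can start on the first video while the second one is still being downloaded, no stage waits for the whole batch.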

Security

Now, one of the things we want to make sure of is that only logged-in users are allowed to upload a video. We often do this with authorization tokens.

However, for our storage, we’d probably be using something like Azure Blob Storage mentioned before. But that’d mean the user would have to upload the video to our servers, and then we’d send it on to the storage.

A thing we can use instead is a presigned URL on AWS, or a shared access signature on Azure.

They are pretty much the same thing - a temporary link directly to the storage. With it, a user first requests the temporary link, and then uploads the video to the storage directly.
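
To get a feeling for how such a temporary link can work, here is a generic sketch based on an HMAC signature. It is NOT the actual AWS or Azure algorithm. The host `storage.example.com`, the secret key and the function names are all made up for illustration.

```python
import hashlib
import hmac
import time
from urllib.parse import parse_qs, urlencode, urlparse

SECRET_KEY = b"demo-secret"   # hypothetical key known only to our backend and storage


def _sign(object_key, expires):
    message = f"{object_key}:{expires}".encode()
    return hmac.new(SECRET_KEY, message, hashlib.sha256).hexdigest()


def create_upload_url(object_key, expires_in_s, now=None):
    """Called by our API server once the user is authenticated."""
    now = time.time() if now is None else now
    expires = int(now) + expires_in_s
    query = urlencode({"expires": expires, "signature": _sign(object_key, expires)})
    return f"https://storage.example.com/{object_key}?{query}"


def is_valid(url, now=None):
    """Called by the storage before it accepts the upload."""
    now = time.time() if now is None else now
    parsed = urlparse(url)
    params = parse_qs(parsed.query)
    try:
        expires = int(params["expires"][0])
        signature = params["signature"][0]
    except (KeyError, ValueError):
        return False
    expected = _sign(parsed.path.lstrip("/"), expires)
    # The link is valid only if nobody tampered with it and it has not expired.
    return hmac.compare_digest(signature, expected) and now <= expires


url = create_upload_url("videos/bunny.mp4", expires_in_s=600, now=1000)
print(is_valid(url, now=1200))   # True
print(is_valid(url, now=2000))   # False
```

The storage never needs to know who the user is. It only checks that the link was signed by us and is still valid.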

Similarly, we can protect the videos themselves - we can encrypt the videos on our server so they can’t be read by unauthorized users. Furthermore, we can add watermarks of our company logo, or add a digital rights management (DRM) system such as PlayReady for dealing with copyrighted material.

Cost Saving & Error Handling

Finally, the 2 parts left to consider are cost savings and error handling. As mentioned at the start, CDN costs are 150k USD daily. That’s a lot to deal with every day.

What we can do is not push all videos to CDN. We could only store the most popular videos, or those with high view count. Low popularity videos will likely force us to spend more money than to gain in revenue. So, we can apply some analytics here and decide which videos we’d store in CDN.
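
The simplest form of such analytics is a view count threshold. This is only a sketch, the function name, the video ids and the threshold are made up.

```python
def select_for_cdn(view_counts, min_views):
    """Return the ids of videos worth pushing to the CDN, most viewed first."""
    popular = [(views, video_id)
               for video_id, views in view_counts.items()
               if views >= min_views]
    popular.sort(reverse=True)
    return [video_id for _, video_id in popular]


view_counts = {"bunny": 120_000, "cat": 35, "tutorial": 8_000}
print(select_for_cdn(view_counts, min_views=1_000))
# ['bunny', 'tutorial']
```

Everything below the threshold would be served from our own video storage instead.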

If it’s necessary because our product is too popular, we could build our own CDN. Netflix already did that with internet service providers. See OpenConnect for more information.

Error handling is a really important topic here. We have a lot of parts in our system. We need to make sure we can recover gracefully.

- Identify recoverable and non-recoverable errors

- Recoverable errors can be retried

- Non-recoverable errors require message to the user

- Retry on recoverable errors such as video processing or file upload

- Limit retries on recoverable errors

- If the video can’t be split on the client, create GOPs on the server instead

- Process requests on different servers if need be

- Process DB operations on a different DB instance if one is down

- And much more
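
The first few points can be sketched as a small retry helper. The two exception classes and `run_with_retries` are hypothetical names, in a real system you’d map actual failures to these two categories.

```python
class RecoverableError(Exception):
    """Temporary failure, e.g. a failed chunk upload. Worth retrying."""


class NonRecoverableError(Exception):
    """Permanent failure, e.g. a malformed video. The user has to be told."""


def run_with_retries(task, max_attempts=3):
    """Retry recoverable errors up to max_attempts, let everything else through."""
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except RecoverableError:
            if attempt == max_attempts:
                raise               # retry limit reached, give up
        # NonRecoverableError is not caught here, so it propagates immediately


attempts = {"count": 0}


def flaky_upload():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise RecoverableError("connection dropped")
    return "uploaded"


print(run_with_retries(flaky_upload))   # uploaded
print(attempts["count"])                # 3
```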

Summary

So, we’ve gone through designing a video streaming service!

To recap a little, let’s see what we’ve done!

- We’ve created a high level design

- Video streaming is done by CDN for popular videos

- Video upload is done on our end entirely with only processed videos pushed to CDN

- We’ve come up with many tasks for processing videos

- Original Storage -> Processing -> Encoded video storage

- We’ve applied parallelization where possible

- Video split into smaller chunks

- Individual chunks processed by themselves

- Encoding video and audio separately

- Performing specific video operations separately

Now, there’s much more to it, and I don’t know how to fix everything, but potential thoughts can be:

- Live streaming videos

- Stricter latency requirement (viewers expect low latency)

- Less parallelization due to smaller chunks being processed real time

- Different error handling as it needs to be faster (no retries)

- Copyright Violations

- Database scaling, such as replication or sharding

References