System Design 13 - Autocomplete

How google suggests search results

Introduction

So, you want to be like google, eh? Well, you’ve come to the right place.

We’ve already built a web crawler. We understand now how to do that. However, would would we do autocomplete and allow searching over the web? Well, let’s take a looks

Algorithms & Data Structures

This is again one of the questions where we need a deeper understanding of algorithms. Or, actually, data structures.

Why? Well, imagine the following:

- You have 100 millions users

- User types in a query and gets a response

- You have saved previously searched queries

Now, how would you search for them? People can look for a lot of stuff, sometimes weird stuff. Try getting all words starting with “muy” in every google search ever searched.

Luckily, there IS a data structure to help us. It’s a tree like structure. Let’s TRIE IT OUT (haha, I’ll see myself out).

Trie

So, the data structure we’ll use is called a Trie. So, how does it work?

Well, let’s quickly say something about trees in Computer Science, because they are unnatural - they are basically trees upside down:

- They are root at the top

- They have branches - groups of nodes. Each branch can have its own branch.

- Imagine a tree with root and 2 nodes. There are 2 branches.

- If both nodes have 2 underlying nodes, there are 2 “global” branches and 2 “local” branches for each -> total of 4 “local” branches

- The outermost nodes are called “leafs”. Those are the nodes at the bottom.

Now, how does this help us? Well, tries are a specific kind of trees

- The root node is empty

- There are 26 children of root node - one for each alphabet letter

- Every subsequent nodes contain additional characters

So, consider the following trie:

{

root: {

t: {

to: {}

te: {

tea: {}

ted: {}

}

}

a: {}

i: {

in: {}

}

}

}Now, it looks really funky in the JSON above. But upon closer inspection, we can actually see it’s an autocomplete!

- There is a “t” child

- There is an “a” child

- There is an “i” child

Furthermore, we know that:

- There are 4 words in “t” - “to”, “te”, “tea”, and “ted”

- There are 0 words in “a”

- There is 1 word in “i” - “in”

Now, we could use this as autocomplete. This is a very basic example, but if a user types “t”, we don’t need to search entire database! We would just save all items in a Trie in the first place, and then whenever a user types “t”, we could immediately return 4 words rather than iterating over all!

Expanding upon trie

Now, let’s take it a little further. We can now see why tries are good for autocomplete. Imagine there are billions of records in there. You’d quickly filter out most.

In fact, you’d get to your requested result in a short time! All you have to do is find the node containing your text!

Consider the following:

- There are 6 words - “adam”, “eve”, “thomas”, “apple”, “anger”, “eloquent”

- User types in “t”

- If you’d have to fetch them all from DB, you’d iterate over 6 items

- With trie, you’d find the single occurence in 1 step - just go into the “t” branch

So, it’s also very fast! But, we also need to save these somewhere. You could either use key-value store (because it’s just JSON), or you could use document store like MongoDB.

Great, now we have it also stored! But we still have an issue. How would we count the number of times something was searched? After all, autocomplete often suggests most searched requests.

Well, let’s take a look back to the original example:

{

root: {

t: {

to: {}

te: {

tea: {}

ted: {}

}

}

a: {}

i: {

in: {}

},

}

}It’s just an object! So, we can also add some more values to it! We could just add another “node” to the root. But this time,

it would be just a property. For example, we could add mostSearched: ['tea', 'ted', 'in'].

By this simple property, we could show right away what are the most searched. But we can do better! We can do this on every node!

Or even better! We can store the count of how many times it was searched! Consider the following:

{

root: {

mostSearched: [{tea: 20}, {ted: 10}, {in: 5}]

t: {

mostSearched: [{tea: 20}, {ted: 10}, {to: 2}]

to: {}

te: {

tea: {}

ted: {}

}

}

a: {}

i: {

in: {}

},

}

}With the above example, when a user focuses the input, we can immediately show him the most searched items

- If he types in

t, we can immediately show him most searched items starting witht - If he searches for

to, we could then update the counter to3 - If he would search for

tomany times, we would propagate it tomostSearchedin parent nodes to adjust for the popularity

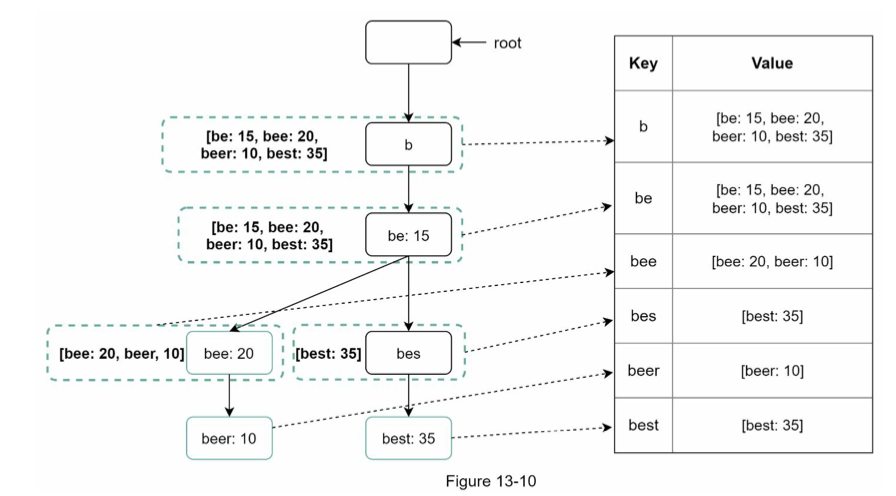

Therefore, a Trie is a great tool for this! It’d finally in DB look something like this:

Designing autocomplete

So, we now know how to store the data efficiently for autocomplete. Let’s try to come up with a design. Again, let’s consider the requirements!

- 10 millions DAU

- all searches are lowercase

- search queries in english

- returns top 5 by popularity

- Only beginning of the query is taken into account

So, in short:

- sorted by popularity

- high traffic

- high scalability

- relevant to the search term

Back of the envelope

- 10 millions DAU

- Assume average 10 searches per day

- Assume 2 words

- Assume word ~= 5 characters

- Assume ASCII characters

- 2 5 1 ~= 10 bytes

Furthermore, let’s get the QPS:

- Each character typed in results in a query

mysearch.com?q=d->mysearch.com?q=di->mysearch.com?q=din->mysearch.com?q=dine - That’s 10 characters per search

- 10 characters per search * 10 searches per day ~= 100 queries

- 10 millions DAU * 100 ~= 1000 millions ~= 1 billion queries per day

- 1 billion / 24 / 60 / 60 ~= 1 billion / 88 000 ~= 1 000 000 000 / 100 000 ~= 10000 QPS

- Peak QPS ~= 2 * QPS ~= 20000

- Assume 5 % of queries are new ~= 1 billion * 0.05 ~= 50 millions ~= 50MB added daily

Design

So, as mentioned above, if the dataset would be very small, we could easily just do this on a regular relational database with:

SELECT * FROM myTable

WHERE query LIKE '{prefix}%'

ORDER BY frequency DESC

LIMIT 5But, it’d be way too easy and not scalable. Soo, let’s do it the hard way!

So, we already know how we are going to store the data. It’s gonna be in a Trie. We’ll also cache the data in there. We’ve already done it - we will keep the top searches on the individual nodes.

Now, we could make a decision here:

- Is it searches across all time, OR

- Searches across N hours back

If the latter, we’d have to set up some cache control. Otherwise, we could just keep them all the time and override it.

The problematic part here is analytics. Because we don’t want our search to be slowed down when analytics are broken.

Imagine the following flow:

- 20k searches happen in one moment

- All of them perform write operations

- All of them retrieve data afterwards

Now, that can be fairly slow, especially with the writes. So, what we could do is separate the services. We’d have:

- A query service that handles requests and just reads data

- An analytics service that performs analytics and builds the trie

And that’s pretty much the design!

- A user enters a web app and starts searching

- A request goes through load balancer to a server

- The request then retrieves the most searched items from query service, specifically Trie Cache

In the background, an analytics service is also doing its job:

- Analytics service goes through logs and passes them to aggregation services

- These services retrieve aggregated data, which are then passed to workers (servers performing asynchronous trie building)

- Workers perform weekly updates on Trie DB

- The latest weekly snapshot is loaded into Trie Cache, allowing for fast search

- The aggregated data would be aggregated by search term, frequency and date.

So, in the end, we’d have:

- Query service behind a load balancer fetching data from cache

- Aggregate service populating the cache and doing the heavy lifting in the background

Furthermore, when the request is back in the browser, we could cache the most popular searches in local storage. That’d save some server time as well and make it faster for the user.

Finally, we could want to have some blacklisted suggestions with sexual or violent content. In that case, we’d still store them in the Trie, but we would simply filter them out after retrieving them.

For the storage - we can have a lot of data to store. So, how’d we scale that? Well, we could create multiple DBs per starting letter (a-m, n-z), or even 1 for each letter (a, b, c, …, z). While it sounds reasonable, we’d quickly find out that some letters are more common than others - quickly try to think about a word starting with X and starting with E.

But, we could do some analytics around it and find the most suitable sharding approach, e.g.

- one server for words starting with

ea-em - one for words starting with

en-ez - one for words starting with

u-z

Summary

So, we’ve created an autocomplete. We’ve discussed Trie a lot, and this entire design is basically around that and potential analytics of storage approach.

Of course, we might need to support additional things. If we’d like to support multiple languages, we might need to support unicode (which is double in size). We could create multiple tries per country as well. There are a lot of ideas.

Additionally, we could support trending searches - e.g. a news event broke out, such as war in Ukraine. For this case, our system is not ready as we do not support real time updates. We’d have to be updating existing tries in the cache and add weights based on searching within short time period (e.g. show “War in Ukraine” before “tree” even though “tree” was searched for 100 times in the last 7 days, while “War in Ukraine” was searched 50 times, but in last hour)

References