System Design 2 - Tools

Second part of my system design train of thought

Introduction

In the last part, I established why system design is important and why it’s not an exam, but rather a brainstorming session. Furthermore, I noted that there is no correct answer to a system design question without getting more context from the interviewer.

Before getting to specific tasks and how to approach them, I’d first like to establish what tools we have at our disposal. All images in this part are taken in some shape or form from the book I’ve added in references.

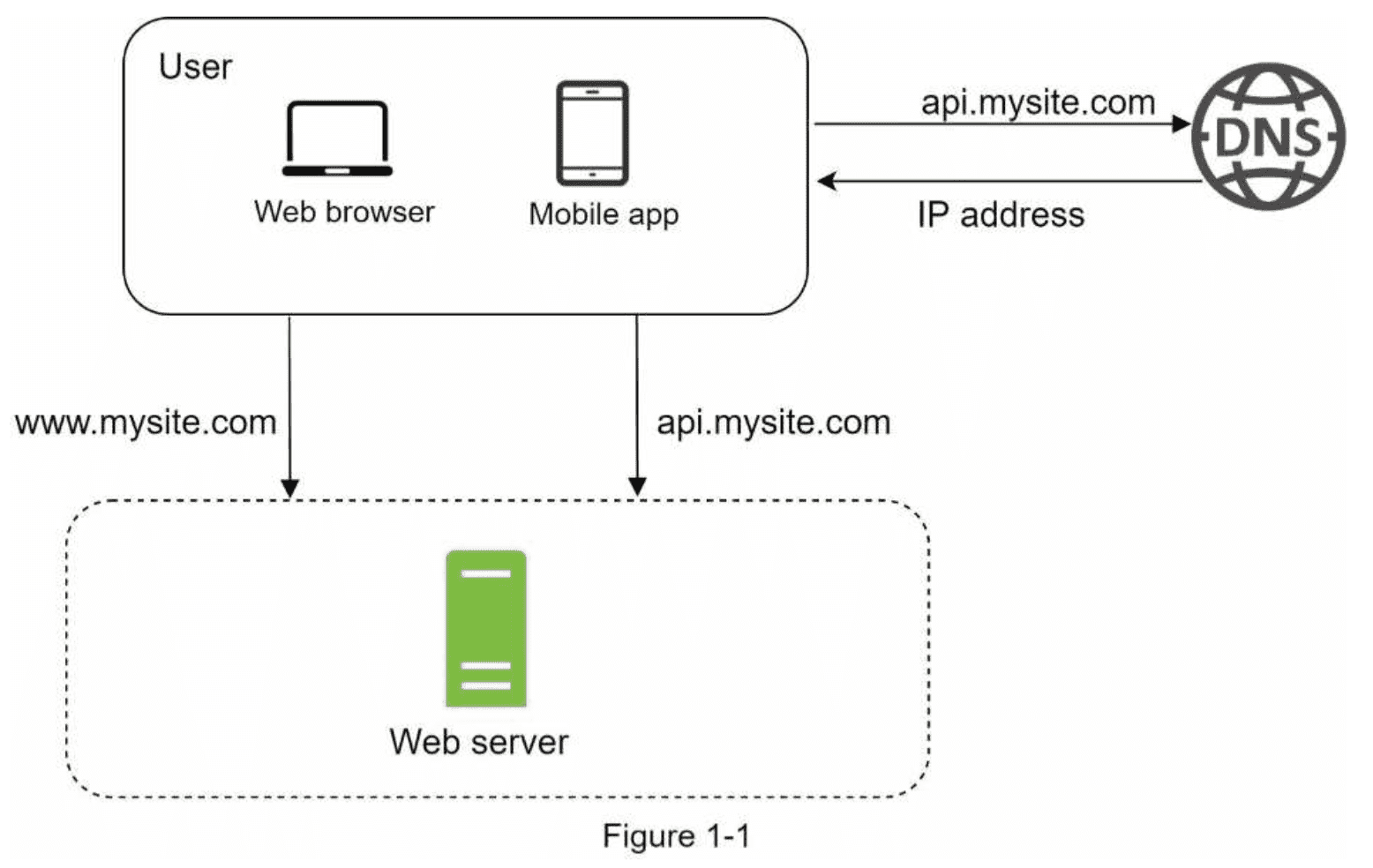

Basic vs Complex System

So, the most basic system we can get is quite simple. It has a client, it has a web server, and it’s accessible. It can look like this:

This is probably how most frontend developers view this setup:

- We have a web or mobile app deployed on an IP address, using DNS to map it to a human-readable name

- We have a server deployed on an IP address, using DNS to map it to a human-readable name

- Frontend communicates with the backend using HTTP calls to its address

Now, this is a valid approach - I do the same for this blog page. Sure, there may be some magic by Netlify where it is deployed, but that’s about it. I didn’t create anything else.

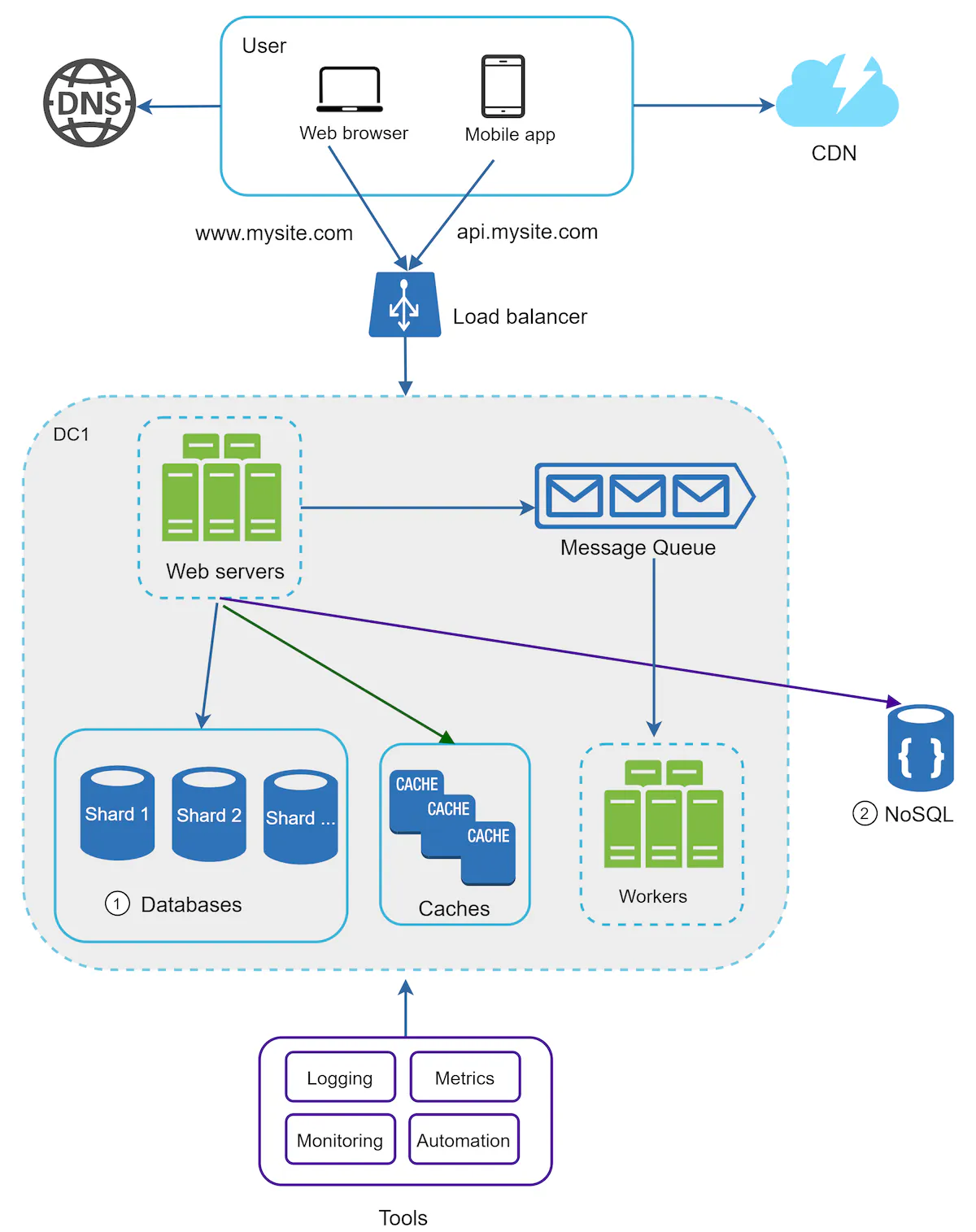

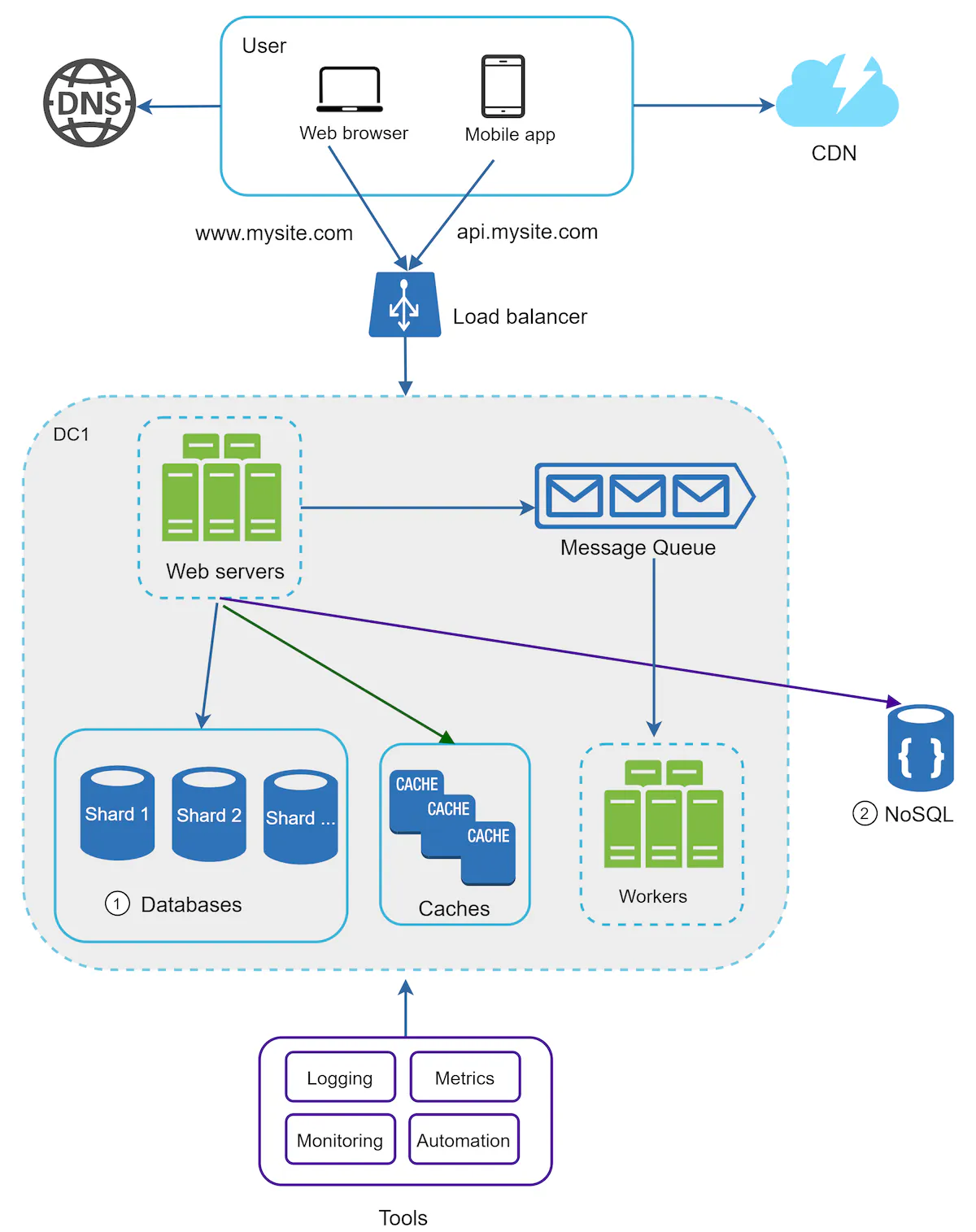

Now, let’s take a look at a complex system:

Well, now we’re talking! This is the last image from the System Design Interview book, in the chapter Scale From Zero to Millions of Users. I said earlier that frontend developers often view the setup differently. If you take a look at the design, that’s because the only thing that changed for the frontend is the CDN for static content. Other than that, you still call the same endpoints - it’s just handled differently behind the URL.

Alex Xu approached this by starting small and adding bits and pieces to the design one at a time. If I were to do the same, I could just copy the book contents. I’ll try to approach this from a different standpoint. This is A complex system design, not THE complex system design. It’s not the best fit for all complex software.

So, what I’m gonna do in the next parts is always come back to this image. I’ll try to specify a business case that would trigger the need for making the setup behind the web server this complex.

Intermezzo

Before I continue, I’d like to say one thing. I forgot it. Nevermind.

CDN - Content Delivery Network

Let’s start from the top. Content Delivery Network, or CDN for short, is a group of servers that serve content close to end users.

In simple words - if I’m in Czechia and log in to Facebook, I’m likely to get content from a CDN. Imagine you have a server in the US and send data to Europe. The data would have to travel thousands of kilometres. But what if you could have a server in Europe? Then it’d be just hundreds.

That’s exactly what a CDN is: multiple servers that send content to the end user. If I’m in the US, I get data from the US. If I’m in Europe, I get it from Europe. CDNs typically have multiple servers per continent (regions). If you have users in multiple regions, using a CDN is a safe choice.

A CDN is one of two tools where the question “should I use it?” always has an easy yes-or-no answer. If you have static content that can be cached:

- You have users in multiple regions -> You want a CDN

- You have users in a single country -> You want a server in that country
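To make the "closest server" idea concrete, here is a minimal sketch that picks the nearest edge server by geographic distance. The edge names and coordinates are made up for illustration, and real CDNs usually route through DNS or anycast rather than computing distances like this.

```javascript
// Hypothetical edge locations (illustration only, not a real CDN's list).
const edges = [
  { name: "us-east", lat: 39.0, lon: -77.5 },
  { name: "eu-central", lat: 50.1, lon: 8.7 },
];

// Great-circle distance in kilometres (haversine formula).
function distanceKm(a, b) {
  const toRad = (deg) => (deg * Math.PI) / 180;
  const dLat = toRad(b.lat - a.lat);
  const dLon = toRad(b.lon - a.lon);
  const h =
    Math.sin(dLat / 2) ** 2 +
    Math.cos(toRad(a.lat)) * Math.cos(toRad(b.lat)) * Math.sin(dLon / 2) ** 2;
  return 2 * 6371 * Math.asin(Math.sqrt(h));
}

// Return the edge with the smallest distance to the user.
function nearestEdge(user) {
  return edges.reduce((best, edge) =>
    distanceKm(user, edge) < distanceKm(user, best) ? edge : best
  );
}

const prague = { lat: 50.08, lon: 14.44 };
console.log(nearestEdge(prague).name); // eu-central
```

A user in Prague ends up a few hundred kilometres from the European edge instead of thousands of kilometres from the US one, which is the whole point of a CDN.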

Web Servers

Even though the load balancer is the second thing from the top, it’s impossible to talk about it before understanding web servers.

A web server is just a computer that has your code and runs it. Whenever you make an API call to the Rick and Morty API, there will be some code like this:

```javascript
app.get("/", async () => {
  const data = await getDataFromDatabase(); // non-blocking: the server can accept other requests while waiting
  return data;
});
```

So, the data is stored in a database. And this code is called on a server. Imagine you have only one web server and 10 users:

- An HTTP call is made to the web server

- The code above is executed

- While the DB data is still being fetched (line 2), another request may already be processed by the web server

One web server can have 10 DB calls in flight simultaneously. The database and the web server are separate processes (they might even be on separate machines).

So, let’s imagine your server is a little more complex. Rather than just fetching data from database, it does a complex operation:

```javascript
app.get("/", async () => {
  performSomeOperationTakingTenSeconds(); // synchronous: blocks the server until it finishes
  const data = await getDataFromDatabase();
  return data;
});
```

If you have this code on the server, when 10 users make a call to the server, the server will:

- take the first request and start performing the operation that takes 10 seconds

- take the second request, but the first request hasn’t finished yet due to the long operation, so the second one is put in a queue

- repeat for all 10 requests

So, it might take 100 seconds to serve all 10 users - 10 seconds for the first, 20 seconds for the second, …

In come multiple servers:

- We can have the same code deployed on multiple servers

- With 2 servers, we’ll reduce the time to handle all requests to 50 seconds; with 10 servers, to 10 seconds.

- Each call will be handled by the same endpoint, but not the same server.
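The arithmetic above can be checked with a small sketch. It assumes every request takes exactly 10 seconds, that requests are blocking, and that they are handed out to servers in turn. The function names are mine, not from any library.

```javascript
// Completion time (in seconds) of each request when `requests` blocking jobs
// of `jobSeconds` each are spread in turn over `servers` machines.
function completionTimes(requests, servers, jobSeconds) {
  const busyUntil = new Array(servers).fill(0); // when each server becomes free
  const times = [];
  for (let i = 0; i < requests; i++) {
    const server = i % servers; // hand out requests one server after another
    busyUntil[server] += jobSeconds;
    times.push(busyUntil[server]);
  }
  return times;
}

// Time until the last of 10 requests (10 seconds each) is finished.
const totalTime = (servers) => Math.max(...completionTimes(10, servers, 10));

console.log(completionTimes(10, 1, 10)); // [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]
console.log(totalTime(1), totalTime(2), totalTime(10)); // 100 50 10
```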

Consideration: As you can see, going from 1 server to 2 saves 50 seconds, but going from 2 servers to 10 saves only 40 more. There are diminishing returns when adding servers, and every server costs money. You need to consider the pros and cons of having multiple servers (and how many of them) for your specific business case.

Consideration: You will see the terms “vertical” and “horizontal” scaling. Adding more servers is horizontal scaling; making a single server more powerful is vertical scaling. This is an important thing to consider as well. If you have an operation that takes 10 seconds to process, you can make your single server performant enough to handle it in 1 second.

You don’t need multiple servers to speed it up.

Consideration: Multiple servers allow for redundancy. If one server goes down, the others can take the load, although they may be slower.

Load Balancer

Now that we’ve established that we can have multiple servers and what their benefit is, what happens? Consider that we don’t do anything except get 10 servers:

- The frontend hits API

- The API performs some code on server

- What server?

That last point is important - what server? We have just created 10 servers. They exist. But most likely, only one is still being hit.

This is where the load balancer comes into play. It’s a tool that “balances the load”. In other words, when the load balancer sees an incoming request, it checks how busy each server is and routes the request to the server with the lowest load. (Picking the least-loaded server is one common strategy; simply rotating through the servers, known as round robin, is another.)
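Here is a minimal sketch of the "route to the server with the lowest load" idea. It assumes that load means the number of requests a server is currently handling. The class and server names are made up for illustration.

```javascript
class LeastLoadBalancer {
  constructor(serverNames) {
    // Track how many requests each server is currently handling.
    this.active = new Map(serverNames.map((name) => [name, 0]));
  }

  // Pick the server with the fewest in-flight requests and mark it busier.
  acquire() {
    let chosen = null;
    for (const [name, count] of this.active) {
      if (chosen === null || count < this.active.get(chosen)) chosen = name;
    }
    if (chosen === null) throw new Error("no servers available");
    this.active.set(chosen, this.active.get(chosen) + 1);
    return chosen;
  }

  // Call when a request finishes so the server's load goes back down.
  release(name) {
    this.active.set(name, Math.max(0, this.active.get(name) - 1));
  }
}

const balancer = new LeastLoadBalancer(["server-1", "server-2", "server-3"]);
const first = balancer.acquire(); // server-1
const second = balancer.acquire(); // server-2
balancer.release(first); // server-1 finished its request
const third = balancer.acquire(); // server-1 again, because it is idle
console.log(first, second, third);
```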

A load balancer is very closely tied to having multiple servers. If you have just one server, there is nothing a load balancer can do - there are no other servers it can route the requests to, so it will keep hitting the same one. However, if you have more servers, you will have to use a load balancer.

Same as with the CDN, the question “Do I want a load balancer?” has a very straightforward answer:

- You have multiple servers -> You need a load balancer

- You have one server -> Load balancer is useless

Refresher

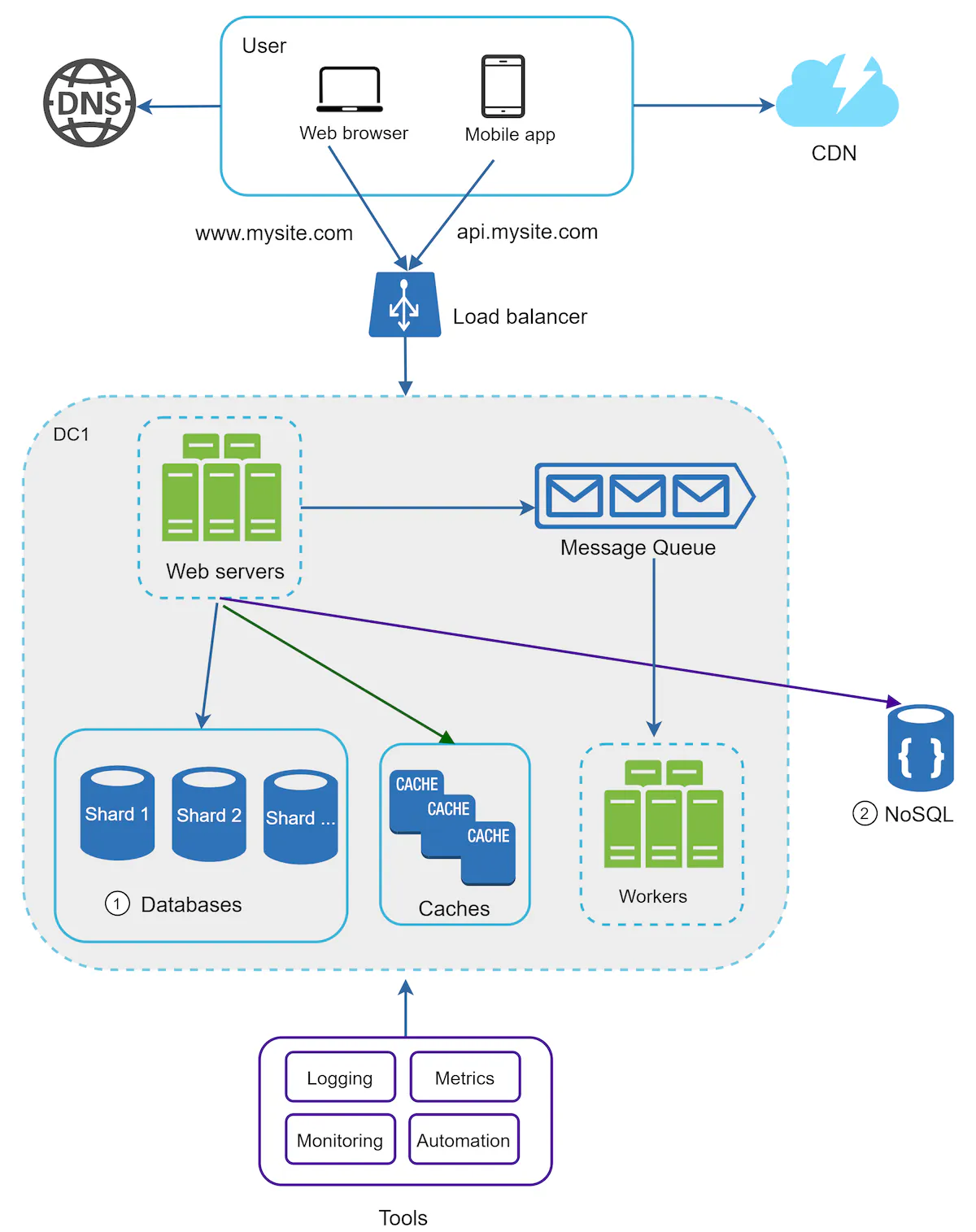

At this point, we’ve gone through CDN, load balancer and webservers. There are still a couple more categories to go through, so I’ve added the image below so you don’t need to scroll all the way up.

Databases

Databases are among the hardest things to consider when dealing with system design and there are quite a few approaches.

What DB to use

In general, there are 2 types of databases - relational, and non-relational.

Relational databases (managed by Relational Database Management Systems, or RDBMS) are basically databases using SQL. Examples are MySQL, PostgreSQL or Microsoft SQL Server. These databases have been around for a long time and have a proven track record of working reliably.

Non-relational (NoSQL) databases are those that don’t store data in related tables and rows, but rather in collections of loosely structured data such as documents or key-value pairs. MongoDB, DynamoDB and Cassandra fall into this category.

In a big system, you might be inclined to use both - SQL for structured data, and NoSQL for unstructured data or for storing massive amounts of it.

Single Database

So, we’ve created a web application. We have a frontend, we have a server that defines the endpoints. Now, we need to store the data so that it survives if our server fails.

To do that, we’ll choose a database that suits our needs. We set it up and we’re done - our server is able to write data to the DB.

When the data is being read, it does so from the same database it writes to.

Now, let’s imagine that:

- We have a load balancer

- We have 10 servers

- We have a single database

We have 10 servers reading from the same database, which is not a problem. What feels weird is that our redundancy is not consistent:

- We have 10 servers

- If one of them dies, others can take care of the job

- What if our database breaks?

Master/Slave Database

To prevent this problem and add redundancy, we can set up multiple databases. However, here we may run into another problem:

- Let’s consider that we have 2 databases.

- Each performs write operations

- How does one DB know about the updates of the other one?

In comes the master/slave concept (nowadays often called primary/replica). In this setup, we’ll have 2 types of databases:

- Master Database - Write

- Slave Database - Read

By doing so, we will have only one database that performs the write operations, and the data from the master DB is replicated to the slave databases. This fits most applications, because they have way more reads than writes (imagine how many Facebook posts you read vs how many you create).

Now, if something happens to the master database, simply make one of the slave databases the master (= allow writing into it). Therefore, your availability stays high.

If something happens to all slave databases, the master database can still perform the read operations - although now it will be slower, it will still be available.

So, in short:

- Master / Slave databases allow for redundancy and higher availability and performance

- When there are problems with databases, reroute the traffic or make slave databases the master

- When using multiple databases, you need to replicate the data across them.
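The routing rules above can be sketched in a few lines. This is a toy model under loud assumptions: each "database" is just an in-memory `Map`, and replication happens instantly on every write, whereas real replication usually lags behind a little. The class and method names are made up for illustration.

```javascript
class ReplicatedDatabase {
  constructor(slaveCount) {
    this.master = new Map();
    this.slaves = Array.from({ length: slaveCount }, () => new Map());
    this.nextSlave = 0;
  }

  // Writes go to the master only, then get copied to every slave.
  write(key, value) {
    this.master.set(key, value);
    for (const slave of this.slaves) slave.set(key, value);
  }

  // Reads rotate across the slaves; with no slaves left, the master serves reads.
  read(key) {
    if (this.slaves.length === 0) return this.master.get(key);
    const slave = this.slaves[this.nextSlave % this.slaves.length];
    this.nextSlave += 1;
    return slave.get(key);
  }

  // Failover: promote the first slave to be the new master.
  promoteSlave() {
    if (this.slaves.length === 0) throw new Error("no slave to promote");
    this.master = this.slaves.shift();
  }
}

const db = new ReplicatedDatabase(2);
db.write("post:1", "Hello");
db.promoteSlave(); // pretend the master died
db.write("post:2", "World");
console.log(db.read("post:1"), db.read("post:2")); // Hello World
```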

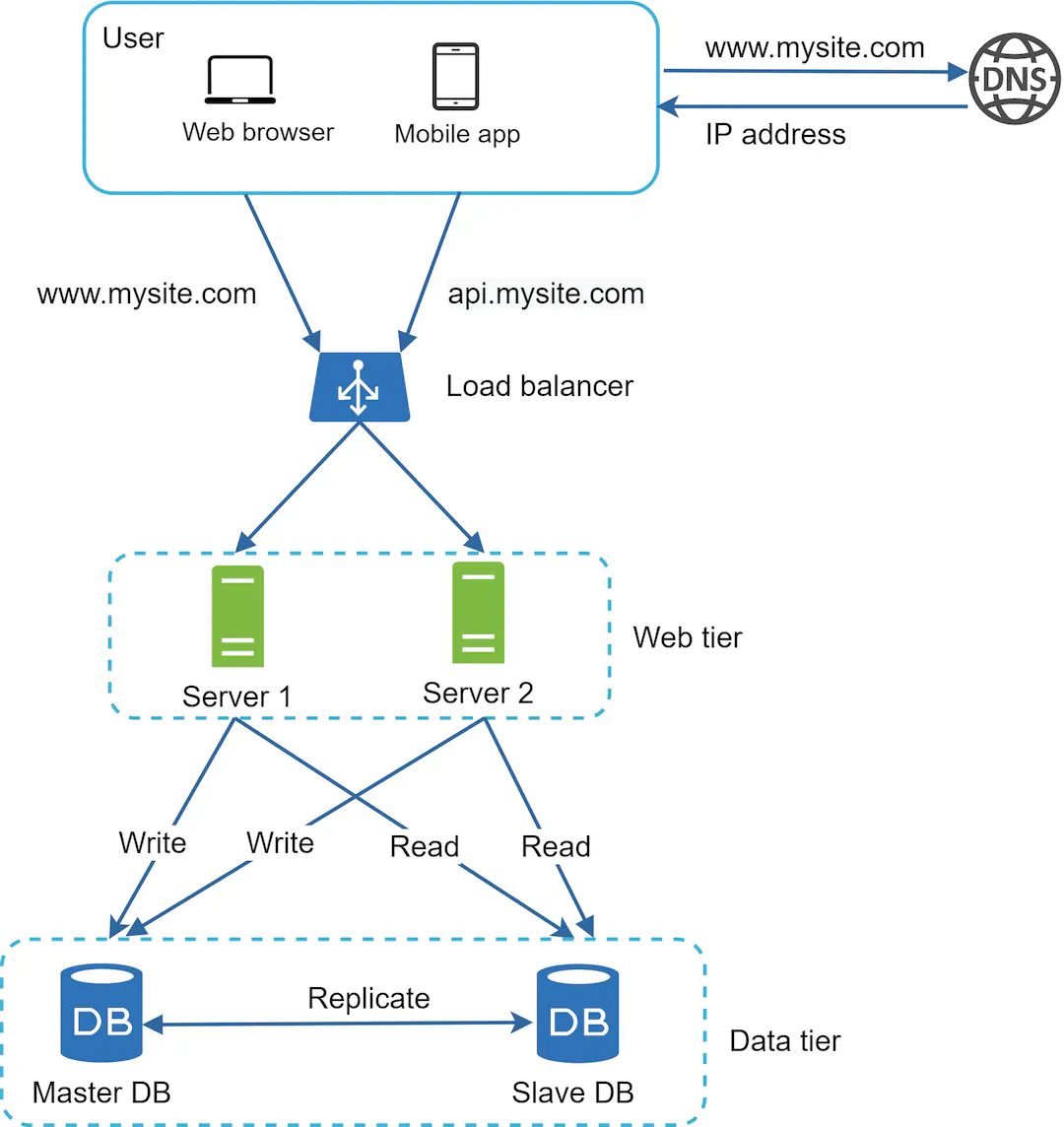

It may end up looking something like this:

Sharding

In the example we’ve started with, you can see Database Shards. So, let’s take a look at this fancy word.

Database sharding is nothing more than splitting your data across multiple databases. Or multiple multiple databases, because we already had multiple databases with Master / Slave, and each shard can have its own Master / Slave setup.

So, what exactly is it? Well, imagine that you have 5 rows in your database:

```javascript
const databaseRecords = [
  { id: 1, name: "Simon" },
  { id: 2, name: "Shymon" },
  { id: 3, name: "Shymono" },
  { id: 4, name: "Simone" },
  { id: 5, name: "Siminion" },
];
```

Now, you could store these records in one DB, or in multiple. You could have, for example, 5 databases, each holding 1 record.

Why is that good? Well, imagine that your business has tens of millions of rows. The data is so large that it’s getting slow to handle it.

So, instead of having one database system with tens of millions of rows, you can have tens of shards with millions of rows each.

Every query is then routed to the shard that holds the requested data. If set up properly, you will be fetching records from millions of rows, rather than from tens of millions.

So how do you achieve that? Well, ignoring the specifics of setting it up, you need to know which shard to save to, and which shard to retrieve data from.

To do that, you’ll often see the term Shard key. This key determines which DB a record is saved to.

Consider a Shard key that is the first letter of the name. With the example above, even if we had 20 shards, we would still save all the records to one, because every name starts with S.

There is no ideal shard key, but it must be chosen carefully so that the data is spread evenly and every shard is used to its full potential.
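To see how much the choice of shard key matters, here is a small sketch that compares two keys on the five example records. It uses 5 shards instead of 20 to keep the output short, and the two key functions are simple illustrations rather than a recommendation.

```javascript
const databaseRecords = [
  { id: 1, name: "Simon" },
  { id: 2, name: "Shymon" },
  { id: 3, name: "Shymono" },
  { id: 4, name: "Simone" },
  { id: 5, name: "Siminion" },
];

const SHARD_COUNT = 5;

// Shard key = first letter of the name (assumes names start with A-Z).
const shardByFirstLetter = (record) =>
  (record.name.toUpperCase().charCodeAt(0) - 65) % SHARD_COUNT;

// Shard key = numeric id, spread over the shards with a modulo.
const shardById = (record) => record.id % SHARD_COUNT;

// Count how many records each shard would receive.
function distribute(records, shardOf) {
  const counts = new Array(SHARD_COUNT).fill(0);
  for (const record of records) counts[shardOf(record)] += 1;
  return counts;
}

console.log(distribute(databaseRecords, shardByFirstLetter)); // [0, 0, 0, 5, 0]
console.log(distribute(databaseRecords, shardById)); // [1, 1, 1, 1, 1]
```

With the first-letter key, one shard holds everything and the other four sit empty. With the id-based key, every shard gets one record.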

Consideration: You can have your shards by location. In that way, you can also have shards closer to the users. Imagine that you have users from Paris and the US. You can have one shard in Paris and one in the US, and save data closer to the user, making it faster.

So, in short, shard is basically a database system that is part of a larger database system - that of the whole company.

Sharding is generally the hardest to design because:

- Uneven distribution of data (one shard contains more data than all the other shards due to a bad Shard key)

- Costs (This means a lot of physical databases)

- Complexity (Developers are likely to manually manage the database. Furthermore, they don’t manage one, but many)

Database Summary

Databases seem like an awful lot to take in. But, in short:

- Choose a database that you feel will be good for your needs

  - You can use either SQL or NoSQL, or both!

- Choose the database setup for your needs

  - Single database, Master/Slave database, sharding

- Consider the costs

  - If you have a single database, you will most likely have to scale up its computing power. When you have multiple, you add additional servers.

- Consider redundancy

  - With a single database, you also have a single point of failure

Caching

Caching is a really simple concept. Consider the following code:

```javascript
const data = {}; // in-memory cache, keyed by id

const getData = (id) => {
  if (id in data) return data[id]; // cache hit: skip the database
  const datum = getDatumFromDatabase(id);
  data[id] = datum; // cache miss: remember the result for next time
  return datum;
};
```

This is one of the simplest forms of caching. Whenever you call a database, the result is saved in memory. When the DB is queried again, you will already have the answer in memory and can return it right away.

Naturally, this can be dangerous: this cache never expires, so it can return stale data, and it grows without limit. But it gives an idea of a simple cache. Now, what other cache options do we have in system design?

The first tool we described is already a cache: the CDN. When a resource is requested for the first time, the CDN fetches the latest version from the origin server. So, if an image hasn’t been fetched yet from the CDN, it is loaded into the CDN. The next time the resource is requested, it is returned from the cache. You can actually see this if you ever deploy on Netlify. Whenever you push new code and load the page, it takes a little longer to load the first time you refresh it.

Similarly, you can cache the data you return from the backend in the browser’s local storage. If it doesn’t change often, you can retrieve it from local storage without calling the server again after you have loaded the page once.

So, what other caching options do we have except between backend and frontend? Well, let’s not view this as backend and frontend. Let’s view this as processes.

As mentioned before, a database can be a program running on some computer. It can be the same computer your backend is on. Or it can be a different one.

If it is the same computer, it is a process on a different port than your web server. So, your web server must request data from it.

Let’s take a look at the whole picture:

- A frontend is a process that runs on one computer

- cache between FE and BE (CDN)

- or: cache between process 1 and process 2 (CDN)

- A backend is a process that runs on another computer

- Database

See what I did there? We made a cache between 2 processes. We can do the same between backend and database!

There are a bunch of caches, for example Redis or Memcached. These are also called “in-memory stores” if you search for them.

What they basically do is that when you query a database, the result is saved into the cache (similarly to the JS code above). Whenever the same data is requested again, it is retrieved from the cache instead of the DB.

Consideration: Caches are faster, but they may not reflect the current state of the database. If your data is modified often and needs to be up to date, a cache may not be the best choice.
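Two common ways to limit staleness are giving every cache entry an expiry time (TTL) and removing an entry whenever the underlying data is written. Below is a minimal in-process sketch of both. It is not how Redis or Memcached are implemented; the `database` map, the `createCache` helper and the 60-second TTL are assumptions for illustration.

```javascript
// Hypothetical stand-in for a real database, with a counter to show when it is hit.
const database = new Map([[1, "Simon"]]);
let databaseReads = 0;
const getDatumFromDatabase = (id) => {
  databaseReads += 1;
  return database.get(id);
};

// `now` is injectable so the expiry logic can be tried without waiting.
function createCache(ttlMs, now = Date.now) {
  const entries = new Map(); // id -> { value, expiresAt }
  return {
    get(id) {
      const entry = entries.get(id);
      if (entry && entry.expiresAt > now()) return entry.value; // fresh hit
      const value = getDatumFromDatabase(id); // miss or expired: ask the DB
      entries.set(id, { value, expiresAt: now() + ttlMs });
      return value;
    },
    // Call after a write so the next read goes back to the database.
    invalidate(id) {
      entries.delete(id);
    },
  };
}

const cache = createCache(60000);
cache.get(1); // miss -> database read
cache.get(1); // hit -> served from memory
database.set(1, "Simone"); // the data changes...
cache.invalidate(1); // ...so we drop the cached copy
console.log(cache.get(1), databaseReads); // Simone 2
```

A shorter TTL means fresher data but more database reads, so it is the same compromise as everywhere else in this post.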

Note: We’re entering territory where we can cache between FE and BE, and between BE and database. These terms are getting outdated.

The reason for that is that we can have multiple backends (or services). Each of the services can have their own database, and the services can communicate with one another. You can cache between these as well.

Refresher

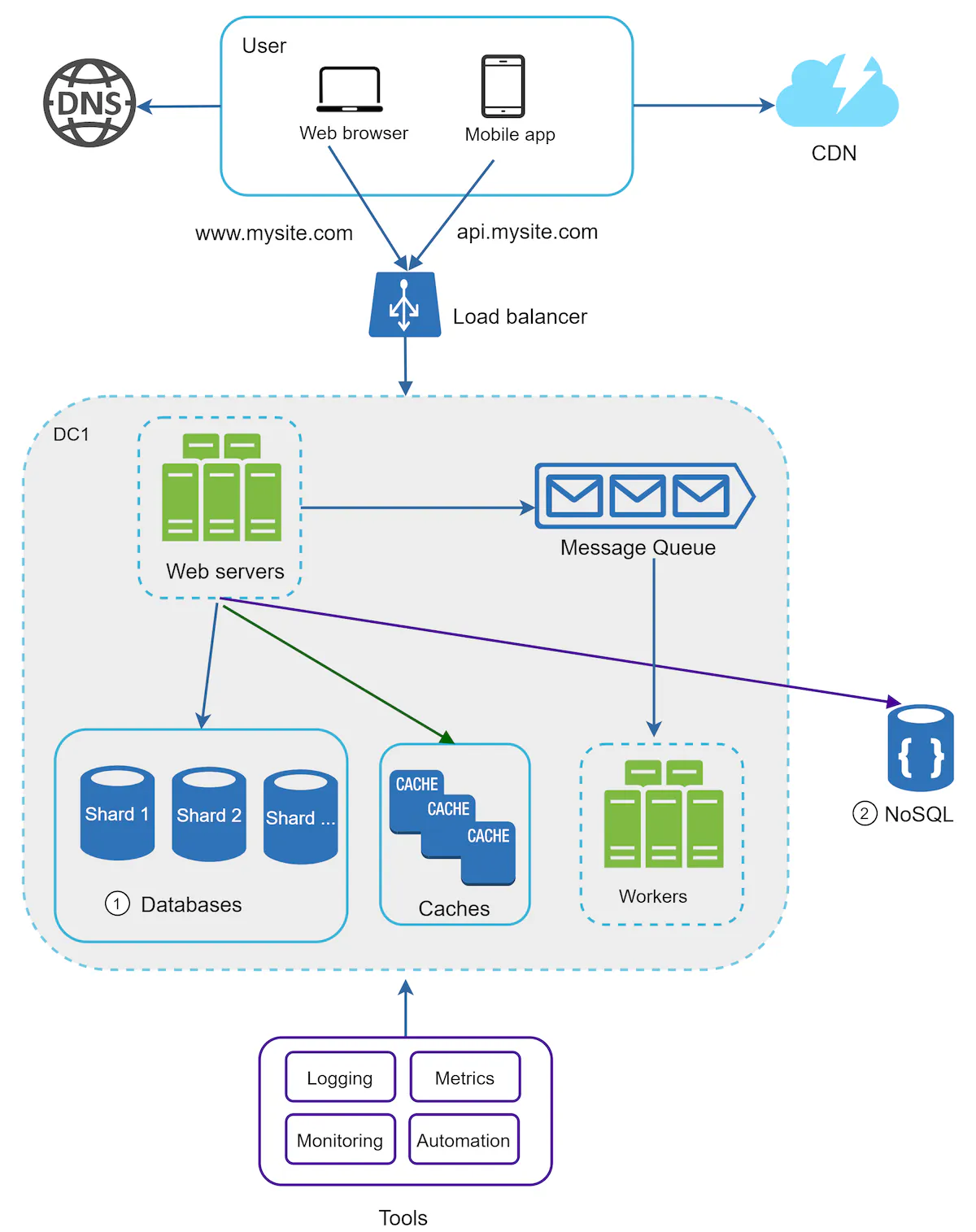

The last things that are left are message queue and workers. I’m adding the image below to see how far we’ve progressed.

Furthermore, I’d like to change/clarify the terms I’ve been using:

- Frontend -> Frontend (A process that runs on the user’s machine)

- Backend -> Backend for Frontend (A process that directly communicates with the frontend)

- Service (or Worker) -> Any additional service. This service can have its own database and can be completely separated

- Database -> Database (A process that writes and reads data)

Message Queue and Workers

We’re slowly getting to the end of this. The last two parts are Message Queue and Workers. Similarly to load balancer, message queue doesn’t make much sense without workers, so let’s deal with those first.

Workers

Workers are basically services having their own code, just like our backend. We could easily have the services as part of our BFF directly.

However, the difference is more in their usage. Imagine, if you will, that there’s an image processing job that takes an hour to finish. Your BFF does way more than image processing, but it is one of the core features. This one feature could single-handedly slow your entire business down. So, you will move it out of your BFF.

And that’s basically it. A Worker is a dedicated piece of code that is separated from your web server so that it can do its own thing while your backend is unaffected in terms of performance.

One thing to consider here is that a separate service can have:

- its own load balancer

- its own cache

- its own database

So, you can get to a point where you design a single worker just as thoroughly as you designed the original system.

Message Queue

I’ve mentioned with workers that they can have their own load balancer, cache and database. However, chances are you don’t want to retrieve the data right away from the Worker when you request it because you know it takes time. So, what you do is:

- You put the things you want to get done in a queue in `BFF`

- You make this queue available to the `Worker`

- The `Worker` is listening to the queue and performs operations on it

Here, different terms are used:

- The `BFF` would be called `Producer` because it produces the request to perform something.

- The `Worker` would be called the `Consumer` because it consumes the request from `Producer` and acts upon it.

- The middleware between these two (that takes from `Producer` and makes it available to `Consumer`) is the Message Queue

Now that it’s clear, let’s reiterate why this is a good concept:

- When there are many items in the queue, you can add more `Workers` so that they deal with it faster

- When there are no items, you can scale the workers back down because you don’t need them

- You can completely decouple the operations that take a long time from your `BFF`
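The producer/consumer flow can be sketched with a plain in-memory array standing in for the queue. In a real system the queue would be a separate service that both sides connect to. The class and function names below are made up for illustration.

```javascript
class MessageQueue {
  constructor() {
    this.messages = [];
  }
  publish(message) {
    this.messages.push(message);
  }
  // Returns the oldest message, or undefined when the queue is empty.
  consume() {
    return this.messages.shift();
  }
  get size() {
    return this.messages.length;
  }
}

const queue = new MessageQueue();

// Producer (the BFF): enqueue the job and respond right away.
function requestImageProcessing(imageId) {
  queue.publish({ imageId });
  return { status: "accepted" };
}

// Consumer (a Worker): take jobs off the queue until it is empty.
function runWorker(workerName, processed) {
  let job;
  while ((job = queue.consume()) !== undefined) {
    processed.push(`${workerName} processed image ${job.imageId}`);
  }
}

requestImageProcessing(1);
requestImageProcessing(2);
const processed = [];
runWorker("worker-1", processed);
console.log(processed);
```

The BFF answers with "accepted" immediately and never waits for the hour-long job. Adding a second worker that reads from the same queue would not require any change to the producer.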

Summary

Let me put the image here one last time:

During this lengthy post, we’ve gone from a simple single web server to multiple web servers with a lot of redundancy and cache and performance gains. These are most likely all the tools we will use in any system design task going further. We may use different algorithms, but we likely won’t go to additional tooling.

What we didn’t cover is additional tooling. There are many ways to approach this, but:

- Logging is basically information that helps developers understand what the issue is and resolve it. It might be as simple as logging to the console.

- Monitoring is a real-time view of what is currently happening on the servers. This is important, because if we are alerted that our servers are 90% used, we may want to scale them up.

- Automation covers absolutely anything that we regularly do manually. This can be scaling of the running instances, running monitoring checks, but also deployments and CI/CD.

- Metrics are already used in some form in the monitoring part, and the two could be interchangeable (RAM usage of servers, for example). However, I used monitoring for system monitoring. In the case of metrics, I’m talking about business metrics - daily users, revenue, how long a user spends using the tool, and more.

A short list I’d like to end with:

- We have many tools at our disposal

- If we use them wrong, it may be very costly

- There is no catch-all tool to solve all our problems. It will always be a compromise.

If you’d like to still speed up your application after doing all these changes, you can still:

- Completely decouple a time-consuming task to a separate service

- Add load balancing, own DB, caching to the separate service

- Set up the connection between the two

We can keep decoupling until every part of our system is a separate computer. But at that point, it’d be very hard to manage (and probably very costly).

So, again - consider the pros and cons, and make a compromise of what is beneficial.

References