System Design 15 - Google Drive

Creating a cloud storage

Introduction

Welcome to the final part of System Design! In this last part, we’ll go and design a cloud storage!

Now, we’ve learned a lot through this system design journey. So, let’s see how we can apply it to creating google drive!

There’s quite a few cloud storages - Google Drive, Dropbox, OneDrive, iCloud. Almost every major IT player has one in store.

Overview of Google Drive

So, let’s take a quick look at how it works.

A “cloud drive” is basically a file system where one can store items. If you’d open your computer storage, you can see a tree-like structure:

- Folder1

- File1

- File2

- Folder2

- File3

- File4

Now, that’s one part of it. There’s also another part to it - synchronization. Basically, when I save files to my iCloud, I can access them from both mobile and web (and sometimes computer directly). So, let’s define the scope!

- Uploading, downloading, file synchronization and notifications

- Both mobile and web app

- Any file type can be uploaded

- Files must be encrypted

- Files are 10 GB or smaller

- 10M daily active users

Another thing to consider is high reliability. It’s simply not acceptable for files to be lost if they are saved there.

Finally, let’s do some Back of the Envelope estimations:

- Assume the number of signed up users is triple

- 10M DAU ~= 30M Signed up users

- Each users gets 10GB of space

- Assume 2 files per day uploaded with size of average 500kB (e.g. school documents)

- 1:1 read-write ratio (when have you last viewed your uploaded file…)

- Required space is 30M 10GB (signed up users allowed space) ~= 300 000 000 GB ~= 300 000 TB ~= 300 PB

- QPS - 10M * 2 uploads per day ~= 20M per day ~= 20 000 000 / 24 / 60 / 60 ~= 240 QPS

- Peak QPS = 2 * 240 = 480 QPS

Hold on

So, we have the requirements. The book at this point goes into a description of creating a single server and scaling it up.

I very much like the idea, but again, as was the case with other chapters, I’m gonna do it in my own words to better understand it.

So, let’s start from scratch. Consider that the above is where we want to get to. And let’s investigate the reasons WHY we want to get there.

Single Server Setup

Now, consider that at this point you are a startup. You have very little users. Your app is used by a couple hundreds tops. Furthermore, each user has less resources. Let’s start with the storage being 1 TB. So, let’s setup a quick API:

- Download file

- Upload file

- Get revision

Let’s consider our app is called simproch-storage.com. So, that’s where we serve the web. Now, there are 3 endpoints:

api.simproch-storage.com/upload-filePOSTrequest with attached file- Uploads a file with

multipart/form-data- see MDN file upload

api.simproch-storage.com/download-file- POST/GET request with

file-pathparam - For file download

- POST/GET request with

api.simproch-storage.com/get-revision- Retrieves revision of document stored

- We keep track of revisions to show old version, OR sync them

Now that we have our API, we’ll quickly setup a server, for example

- NestJS server

- Angular frontend

- MySQL database

Now, when we store a file, we generate a revision for this file, perhaps an UUID, or checksum of contents. The flow is like:

- Upload file in frontend

- Send it to NestJS server

- NestJS creates revision ID and saves to DB

- User can then retrieve the file via UI by specifying the path, which is unique for users

- Or by ID element

We also define it on HTTPS so the communication is secure.

Finally, we have all of this on a web server. But soon, we find out something disturbing! We’re running out of space because users started uploading too much, or because we’ve gotten a spike in traffic since our business is more popular!

So, what do we do? Well, we continue by creating more webservers. Now, we have multiple webservers, but we still don’t have any data in there! So, we come up with a brilliant approach. We will shard the database.

- We have a user ID

- We’ll convert it to a numeric representation and modulo it by 4.

- We’ll also have 4 servers. If the result of modulo operation is 0, we’ll save the data for that user to server 0.

- We do the same for next 3 servers

Great! We’ve accomplished something. But our business go bigger very fast, and now we have perhaps 4 TB of data for users available.

Even though we’ve put out the fire, we don’t want to run into this stressful situation again. So, we start thinking:

- What if one of the servers is unavailable? The user data are probably lost

- What if we get more users from different regions? This happened once, we have a chance to become a bigger business

Slowly, the What If scenarios pile up, and we decide to investigate. We soon find out about multiple cloud technologies allowing for storage.

One such technology is Amazon S3 Object Storage. We see that we can store any files in here. Furthermore, AWS takes the regional problems from us - we just need to configure it

- We can easily replicate files in the same region into multiple buckets

- We can replicate files in the same region across different regions

So, we start using this. Instead of fetching and saving the files to our own DB, we’ll save it to a different provider.

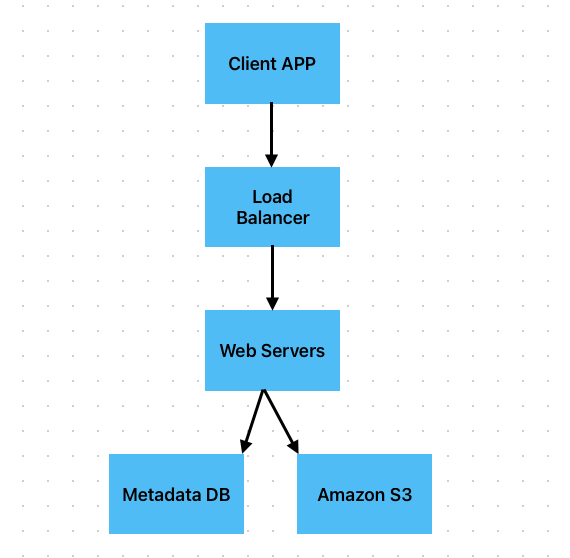

Now, since we’ve scaled this part, it makes sense to try scaling other parts as well:

- We now have multiple databases, but still just one server processing the requests. We decide to add load balancer so we can add/remove servers at will depending on traffic

- We need to keep track of the things we’ve saved to Amazon S3, so, we’ll still have our own database describing the metadata

- To avoid single point of failure on our hardware, we move it out of the servers as well

Now, we’ve gotten to a fairly solid high level design!

Now that’s done, we finally have a solid base. So, where do we go now?

Well, we may need to sync conflicts. Consider that there are multiple users that have access to same document. What if both of them update the file at the same time? This is where the revision comes in:

We’ll basically do the same as with git conflicts.

- The first revision to come will be the main file

- The second revision that is diverged will be saved as well, but users can apply those changes - or at least see them

What would finally happen be:

- file

bunny.docxbeing the main file - another file in same folder

bunny__revision__123123__2023-25-11.docxshowing the user that we didn’t manage to save a single file, but they can work with it and resolve conflicts themselves

Now, if we look at the system design back up, we’ll notice one thing we’ve encountered before. When uploading a file, we first upload it to our server, and then to S3 storage. Again, let’s use presigned URL

That way, we’ll separate the design a little:

- When uploading a file, it is first uploaded to the cloud storage, only later saved to our metadata DB

- Revisions are still in our metadata DB

- What to retrieve goes to our server, after which we return presigned URL to download from AWS directly

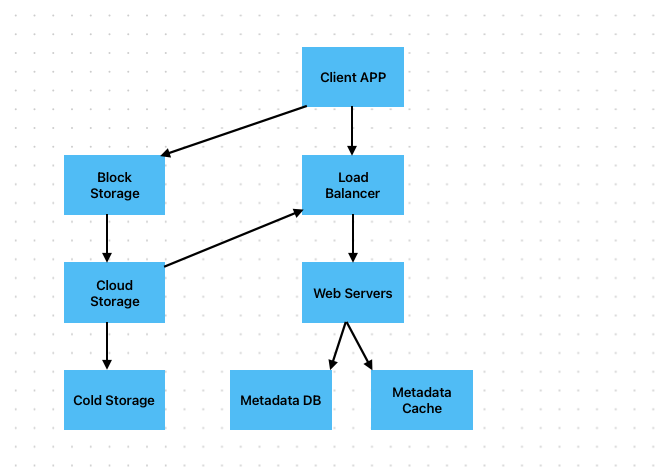

It will look something like this:

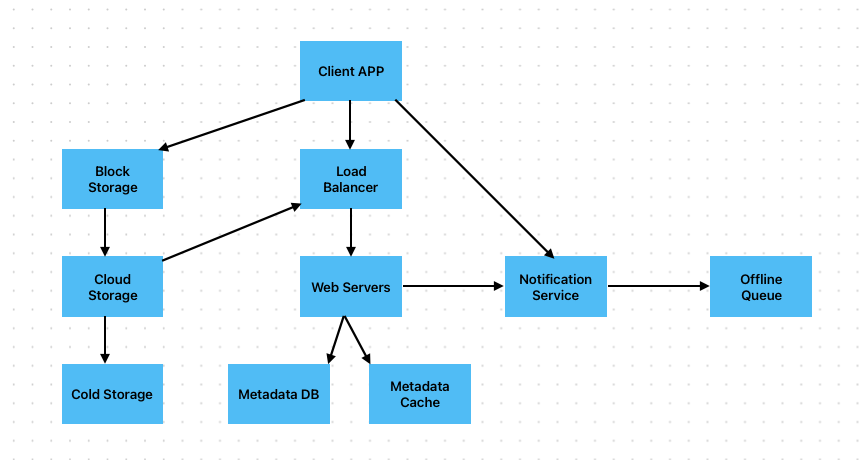

Now, we can see that the biggest change is:

- We’ve added metadata cache

- We upload the files differently

Now, I mentioned presigned URL. And we can use that. However, we could also use new technologies.

In YouTube section, I’ve mentioned Group of Pictures, essentially splitting a video into many chunks. Block Level Storage is essentially the same thing, except we separate it into various blocks. This allows for parallelization and higher performance.

The blocks by themselves make no sense, just as GOPs. Only when a file storage above them merges the blocks together are they useful.

And that’s pretty much it! We’re missing two things:

- Notification service. A client may want to be notified of changes to his files. We’ll use long polling for that.

- Offline backup. If something has changed while the user was offline, we want to make it known to him. When a file is updated, the next time user logs in, he’ll be notified there are changes to be pulled from remote.

Great! We’ve quickly moved from a very startupy system that was sufficient for our needs back then, but because company was performing good, we had to scale it up!

This is the final high level design

Deep Dive

Now, let’s move a little deeper. Let’s review the requirements again:

- Uploading, downloading, file synchronization and notifications

- Both mobile and web app

- Any file type can be uploaded

- Files must be encrypted

- Files are 10 GB or smaller

- 10M daily active users

We’ve already added upload, download, file sync, and notifications

- Upload to S3

- Download through S3 but metadata first through our web servers

- File synchronization achieved via conflicts resolution and offline queue

- Notification in place

Now, let’s discuss some deep dive parts.

We want to deep dive into upload and download, because these are the main features. However, to deep dive in them, we first need a couple more words about block storage.

So, we’ll deep dive into:

- Block Storage

- Metadata DB

- Upload

- Download

Block Storage

So, we’ve discussed block storage as a way to parallelize the flow. However, it’s so much more than that.

While with videos, we’ve split it into groups mainly for processing the video, and there are streaming protocols in place that deal for downloading it for us, we can apply similar pattern in here!

Imagine the following scenario:

- You are working on a budget on next year with 5 different people

- You are using excel sheet with 12 tabs, one for each month.

- You’ve already finalized first 11 months and are working only on the twelvth

- Why would you pull changes from the first 11 months every time if you’re not updating them?

Now, with block storage, it’s a little more complicated. We have blocks of data limited by size. That being said, we need to order them properly to show changes. And while we probably don’t have 12 blocks - one per tab - in excel, it gives a good idea what we do.

This is called Delta Sync. Basically, by splitting the upload and download into blocks, we can only update parts that need complete fetching. This significantly reduces bandwidth requirements and can lead to fetching file from an hour to minutes or seconds.

Another thing we’ll do is compression. We’ve already tackled video processing with compression algorithms before. But we don’t compress just videos. We can also compress images or text (e.g. gzip). For more about lossless compression, see Wikipedia.

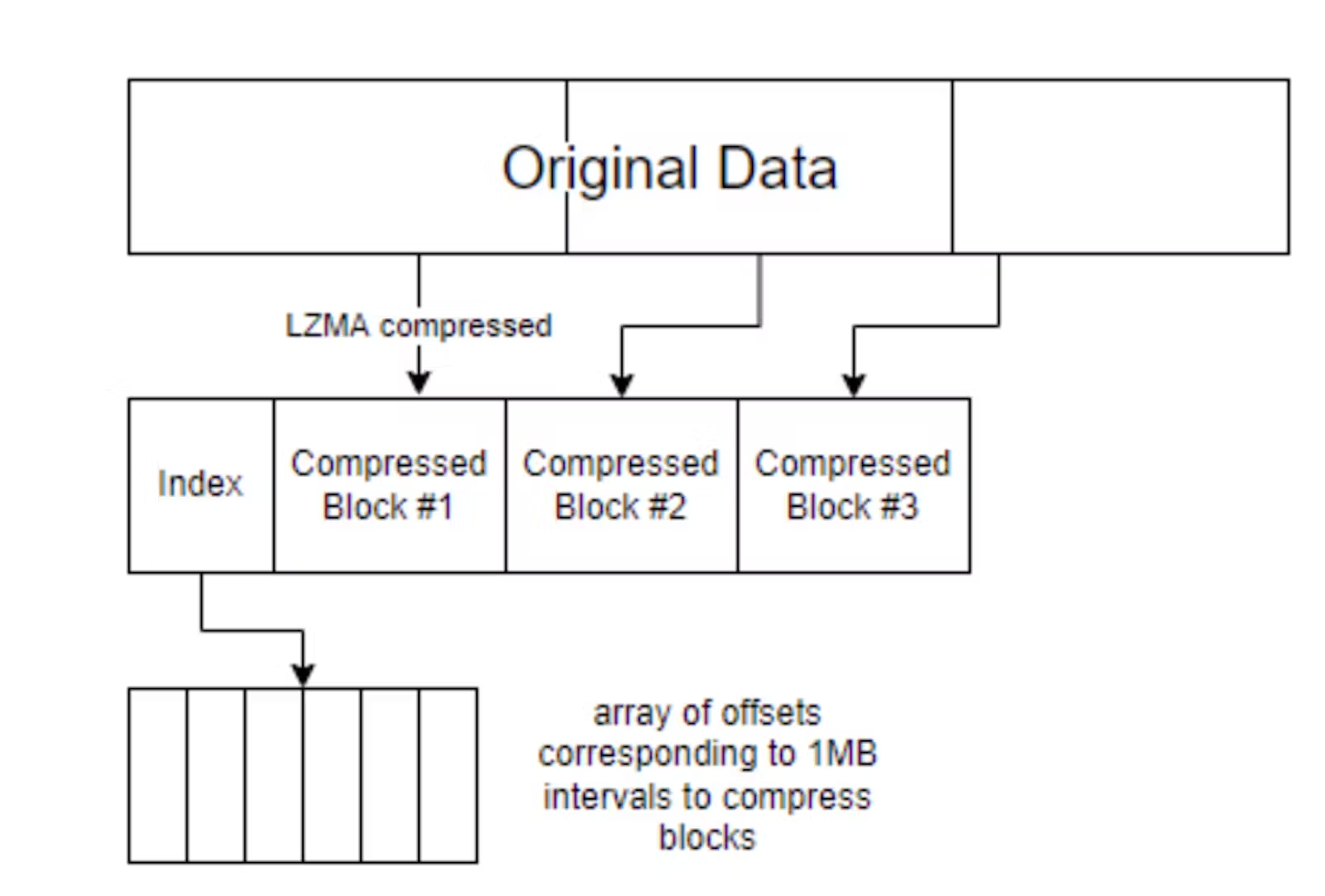

So, what we’ll essentially do is:

- We split the original data into blocks

- We compress the individual blocks with a desired algorithm

- We store these blocks

The image above shows how compressed blocks are created and stored. And while the article uses Lempev-Ziv-Markov chain Algorithm (LZMA), we can compress the blocks with desired algorithm whether it is an image, video or other possibilities.

Now, if files are changed, we’d compress the blocks. We’d then compare the blocks with stored ones to see which changed. And those that changed would be the only ones uploaded (or downloaded).

Metadata DB

One thing to bear in mind is consistency. It is not desirable to show to different clients different views (unless it’s being updated by both simultaneously).

A way to deal with this is making sure we invalidate the cache whenever a new block is received, so that next data load will return real data.

SQL databases follow atomicity, consistency, isolation and durability, or ACID for short. This enables high consistency and reliability. However, NoSQL databases don’t have it by design, and therefore we might need to do that ourselves if we opt to use NoSQL DB.

I’ve mentioned that we’re storing the blocks. While individual blocks are sent to S3 for faster speed, we need to be able to track it.

Furthermore, we store:

- Users

- Workspaces (multiple people, same files)

- Devices (notifications)

- Files

- File revisions

To view the relations:

- A user is in a workspace.

- A workspace contains N files for users to collaborate on

- A file is described by file version

- A file version comprises of N blocks in specific order

- Finally, for notifications, user has N devices. A device can be linked to a single revision for synchronization

There will likely be more relations, but this is sufficient for high level.

Upload Flow

Now, let’s see what happens when a user starts uploading a file. First, consider the file does not exist:

- File upload starts

- Store file metadata -

uploadStatusispending - User uploads file to block servers

- Block servers chunk the files into blocks

- Blocks are compressed and encrypted, then uploaded to cloud storage

- Once uploaded, request API servers to update metadata

uploadStatusisuploaded- Notify client that his file was uploaded

- Store file metadata -

Now, if it’s an existing file already:

- File upload is the same as previously, however

- Other users sharing the file in workspace are notified

- Both upload pending and upload completed

- After chunks are created, we compare them to our saved chunks so that we don’t waste bandwidth

- Other users sharing the file in workspace are notified

Download Flow

The download is triggered when a file is added or edited somewhere. There are 2 ways a user downloads a file:

- Notification from upload flow

- A client is offline. On next login, he’ll receive latest changes

Now, once a client knows there are new files, it will simpluy request the metadata, and then download blocks to construct the file.

- Notification service informs client that a file is changed

- Client fetches new metadata

- API servers return metadata

- Client requests block servers to download blocks

- Storage returns blocks

- Client downloads blocks to reconstruct the file

Notifications

The notifications serve 2 purposes

- Consistency - users are aware there are changes in progress and should not push new changes

- Information to download latest changes, both offline and online users

As for the downloading of latest changes, we have 2 options. I’ve already mentioned long polling in the high level design.

Now, in chat service, I’ve mentioned that we have 3 options

- Short polling, never to be used

- Long polling, sometimes to be used

- Websockets

The last 2 options work well. However, websockets are better suited for real-time communication, such as chats, and for bidirectional communication.

Here, it’s one directional - we are notified once about the changes, and we immediately pull them. Therefore, long polling is the way to go.

But even then, websockets would work. That’s something to keep in mind. It might be suited for real-time document updates, such as those on google docs.

Storage Space

Now, with storage space, we may be quite constrainted.

- To support file revisions, we store multiple versions of same file

- Frequent backups can consume a lot of space

Therefore, we may want to make some improvements:

- Remove duplicate blocks

- Consider the excel example before.

- With Tab 1 was most likely untouched for quite some time, while Tab 12 is frequently updated

- We’re likely storing many duplicate blocks of Tab 1 whenever each other tab was updated

- We don’t need to keep them stored, only in metadata

- Intelligent backup strategy

- A limit for the number of revisions to be stored

- Remove older versions

- Keep valuable revisions only

- If a file was edited 1000 times because of typos, we probably don’t need to keep track of all of them

- Add weight to recent versions

- Might need some analytics

- Less used data to be kept in cold storage

- Not all data need to be fetched fast

- Very old revisions are unlikely to be touched

- For example, Amazon S3 Glacier is way cheaper

Error handling

As is always the case with these applications, we want to have solid error handling

- Allow gracefully solved issues.

- If a single server fails, request should be redirected

- If load balancer fails, other one will pick it up

- Cache is replicated multiple times, if data can’t be retrieved from expected one, retrieve from another

- Write DB is down - promote slave to master

- Read DB is down - pick up by another slave

- Notifications can be handled by multiple servers as well

Summary

In this part, we’ve investigated google drive a little. Basically, we’ve:

- Spread files into smaller chunks for faster performance

- Optimized storage by compressing individual blocks of data

- Encrypted the blocks of data to allow for better security

- Dealt with file sync through revisions and conflicts

- Kept file metadata so we are able to fetch data from storage servers

- Added notifications via long polling

References