System Design 12 - Web Crawler

Building web archive

Introduction

This time we’re building a web crawler. Now, what exactly is a web crawler?

Sometimes called robot or spider, it’s basically a program that goes through a web page and saves it for later use.

There are multiple use cases of web crawler

- Wayback Machine, allowing users to visit old versions of web pages

- Search Engine Indexing, allowing creating a local copy of web page

- Data Mining, allowing to create tools like ChatGPT

- Monitoring of copyright infringement, allowing to enforce law

So, it’s a very useful tool. Now, how would we design one?

Well, in short, it’s fairly simple:

- Get a list of URLs to crawl

- Go through the URLs, download them, go through them

- Save them

- Over time, go over already saved URLs to see changes

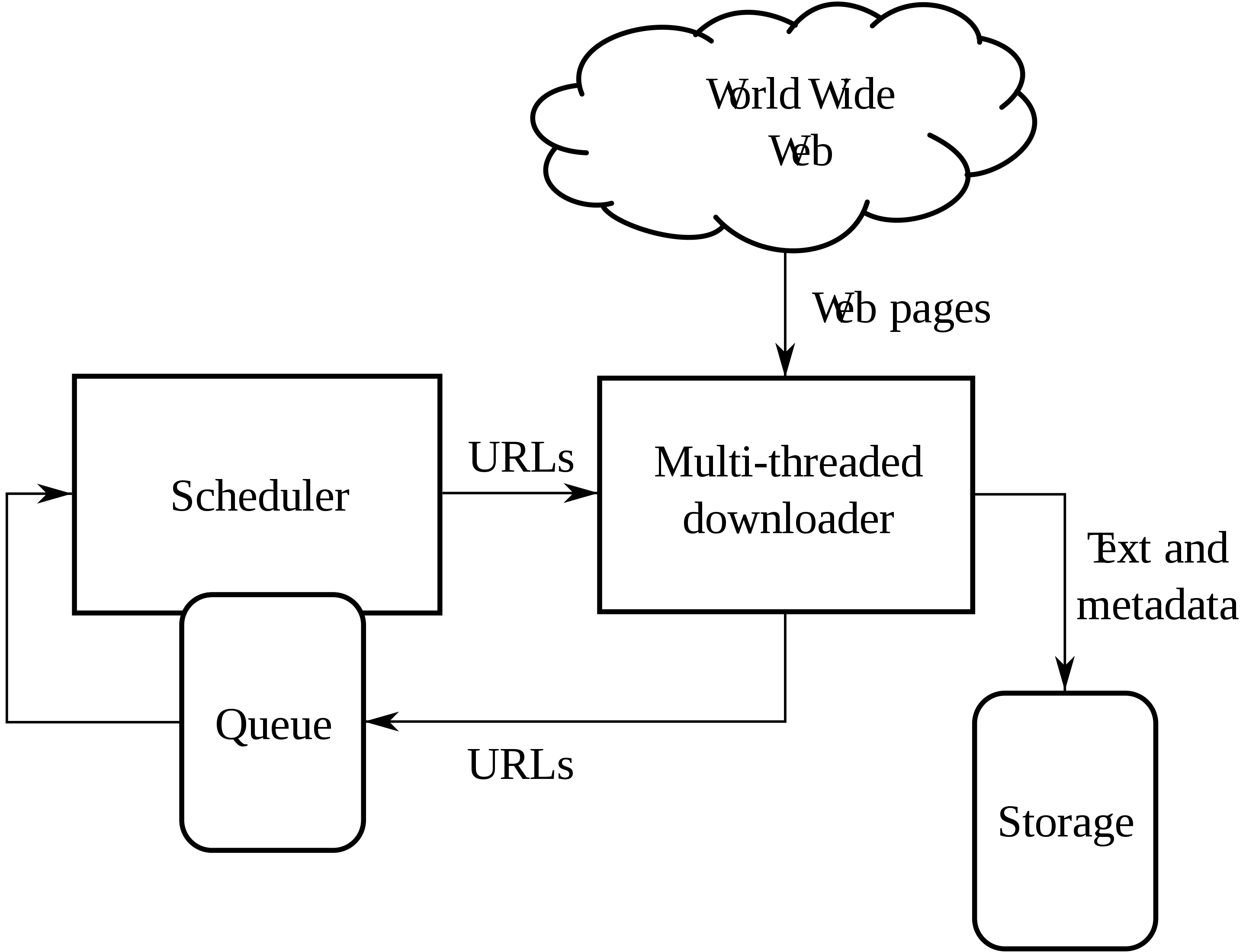

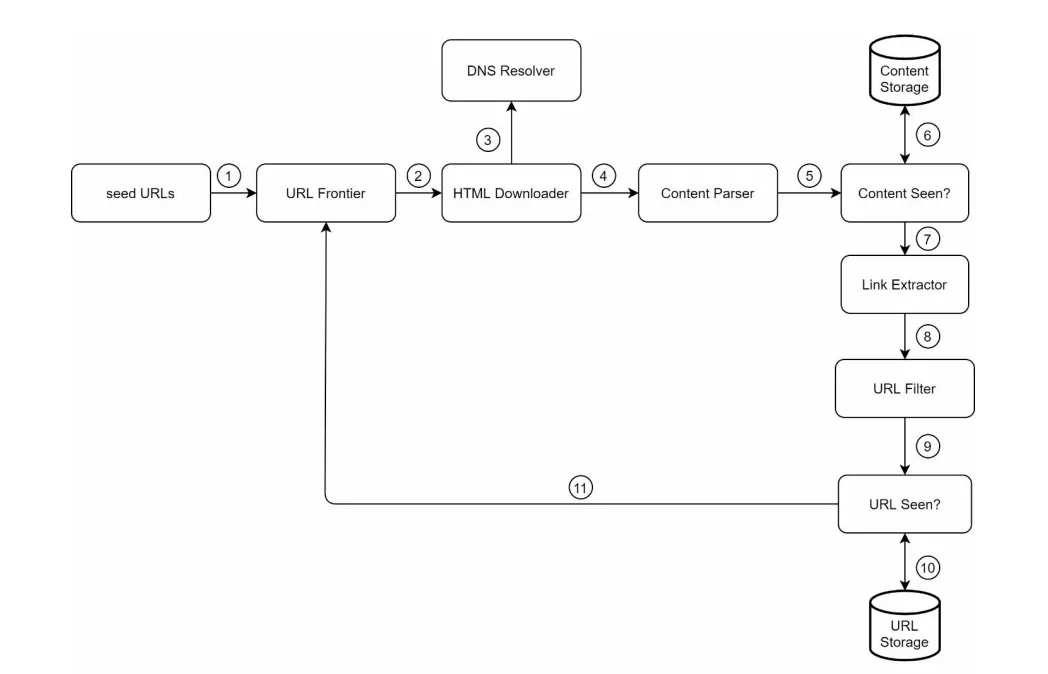

A basic architecture can be seen below

Now, it seems fairly simple at first glance. If I were to go through URLs of my university, it’d be fairly easy and fast. But the problem comes with scalability. So, let’s set some rules:

- Our tool is used for search engine indexing

- We collect 1 billion pages monthly

- We should consider both newly added pages, and also edited (already crawled)

- We want to store the pages for 5 years

- Duplicate content should be ignored

So, looking at the above requirements, we need to:

- Scale the system well - a billion pages monthly is a lot. We need to allow good processing

- Storage must be sufficient to store that many pages

- We can’t make too many requests so we don’t run into blacklisting or rate limiting

Back of the envelope

- 1 billions web pages monthly ~= 1 000 000 000 / 30 ~= 33 million pages daily ~= 1.5 million pages per hour

- 1.5 million pages per hour / 3600 ~= 400 pages per Second

- QPS ~= 400 pages per second

- Peak QPS ~= 800 pages per second

- Storage: Website being ~500 kb => 1 billion * 500 kb ~= 500 billion kb ~= 500 trillion b ~= 500 TB per month

- Storage for 5 years = 500 TB 12 5 ~= 30PB for 5 years

High-level design

So, we’ve already had a basic web crawler architecture. But we need to have our one quite scaled.

We’re gonna crawl a lot of web pages. To achieve that, we’ll need a couple of items:

- We know we need to download URLs

- We know we need to filter some content out (duplicates)

- We know we want to store it

- We know we want to check them multiple times

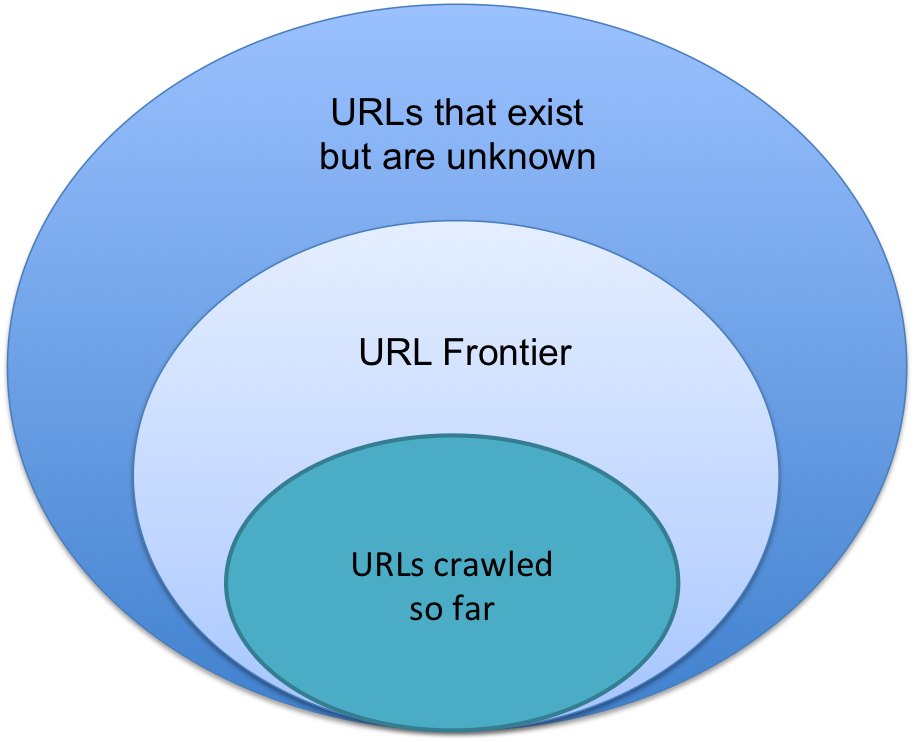

So, one of the things we need to do is get a list of URLs to crawl. We can see that as a so-called URL Frontier:

So, here we can basically split our system into 3 components now:

- One where we don’t know the URLs yet but they exist (seed URL)

- One where we know them and they are yet to be crawled (URL Frontier)

- Ones that we’ve crawled (Downloaded, filtered, stored)

A seed URL is basically a root URL from where we begin crawling. This could be about.google.com or google.com - anything where we plan to go further.

Once we went through the links in seed URL, we then insert them into URL Frontier.

Effectively, the main difference is:

- on seed URL, we go through all links on web and pass them forward

- on URL Frontier, we actually go through the pages

So, that’s the first two parts. What happens next?

Well, we need to download the HTML. There are many popular tools to do that, but this will likely be slow. Furthermore, we need a DNS resolver to be able to

match wikipedia.org to it’s IP address at the current time, because DNS of websites can change over time, but their name won’t, and we need DNS to download it.

After that, we’ll perform the filtering of duplicate web pages. For example, we can create a hash of the entire web page and see if it already exists. If not, we’ll process it. If it exists, there’s no need to keep this in our storage.

And finally, we can filter out some unwanted pages, such as blacklisted pages or unsupported content types/file extensions.

After all of this, we could save it to our storage. BUT! There are actually more steps!

- Imagine you’re crawling a lot of stuff, before you realize that the webpage doesn’t make sense because they are malformed. Therefore, before starting the process of filtering and duplicating, we’ll first parse whether the page is okay

- The paths might be broken. We need to extract the correct URLs - for example, in the below image, the path is

/images/system-design. That means absolute path relative to root of the page. So, if we’d try to download this, we need to build the full URL.

So finally, the design is:

- seed URLs to start the process

- URL Frontier contains URLs to be processed by crawler

- HTML Downloader takes the page, maps it to DNS, downloads it

- Content Parser parses the page to see if it’s worth checking it and it’s not malformed

- Content Seen validates whether we already have the page using hash of the page (or a checksum, if you will)

- If the content is valid and we want to keep track of it, we store it to disk (popular content such as wikipedia might be in memory)

- We perform some URL extractions to combine links relative to their parent

- We filter out blacklisted URLs or content types we do not want

- We also confirm that it’s a URL a URL we haven’t yet seen. If it is, we want to crawl it again at a later point -> we add it to the frontier

- We finally store the visited URLs

And that’s our high level design!

Deep-dive

In this part, we’ll go through some deeper knowledge. One of them will again be algorithms.

What we’ll discuss is:

- Traversing the pages

- URL Frontier

- HTML Downloader

- Robustness

- Extensibility

- Problematic Content

Traversing

Traversing is the algorithmic part. If you’d open developer tools, you’d see that it’s actually a tree (or a graph). The difference is out of scope of this post.

There are a bunch of algorithms to do that. The two I’ll mention are Depth-First Search (DFS) and Breadth-First Search (BFS)

Consider the following HTML code:

<div>

<div id="first">

<div id="first-1"></div>

<div id="first-2"></div>

<div id="first-3"></div>

<div id="second"></div>

<div id="third"></div>

</div>DFS is basically going deep first, as the name implies. What it means is with the above code, DFS process it as follows:

- root -> first -> first-1 -> first-2 -> first-3 -> second -> third

BFS is very similar to it, traversing it on “levels”

- root -> first -> second -> third -> first-1 -> first-2 -> first-3

Now, each have their use cases:

- DFS would go very deep very fast, and can be used when some pages have priority over others

- BFS is often the way to go

Either way, we’re traversing a lot of stuff in short time. We need to be careful to not flood the page with requests.

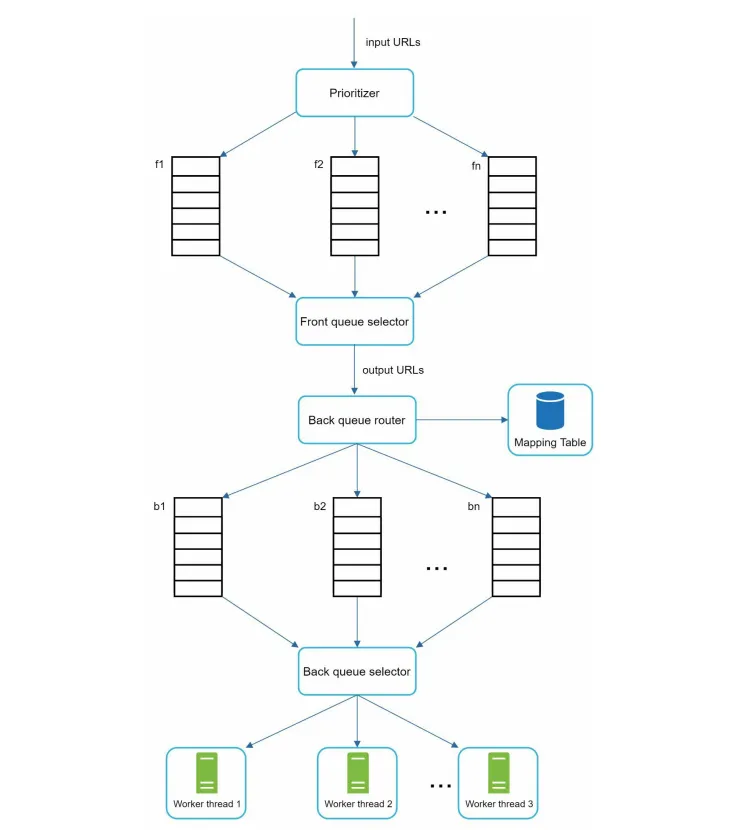

URL Frontier

As mentioned, we need to address flooding the page. URL frontier helps with that. This component contains URLs that are yet to be crawled.

In here, we can effectively slow processing of some pages down. This is also called politeness

What we would use here is basically a queue. We’d add a delay between individual downloads of pages. Imagine the following:

- We retrieve 20 links from wikipedia

- We process them all in a single queue, adding a second timeout before individual entries

- We wouldn’t flood the host

Similarly, we want to give different priorities from pages - for example, a forum post on apple has (probably) less priority than root apple page. Therefore, in URL frontier, we’d basically have 2 components:

- Prioritization, who takes URLs of specific seed as input, and returns prioritized URLs based on our bias

- Politeness, who takes a list of prioritized URLs and puts them into a queue, allowing for slower processing so we don’t flood the root

The final component could ook like:

Finally, we’d need to make sure the pages are fresh. So, finally, once we’d crawl the pages, we want to recrawl them again. A blog post from a year back is unlikely to have new content. A main company profile is. So, we’d prioritize here as well.

Now the storage part is tricky - we want to keep the URLs on the disk, but getting them all from disk always is slow. So, we’ll have a hybrid approach

- URLs soon to be taken are kept in memory so they are available

- Once they are processed, they are removed from memory

- New URLs to be processed are enqueued so they are in memory again

HTML Downloader

The HTML downloader downloads the page. There’s an important nuance here - robots.txt.

robots.txt file is available on many pages that allows or disallows specific content to be crawled. You can find one on Amazon

These could be cached so we don’t need to download them all the time. When all domain has been crawled, it can be removed from memory. This allows better memory.

Another performance optimizations we can do is having distributed crawls.

- We’ll have multiple downloaders so that we can process multiple pages in parallel

- We could have servers geographically so that we download page from US faster with US server than with EU server

- Some pages might be intentionally slow, so we could set up a time limit for response, otherwise discard it

- Caching DNS. It can change, but we can completely separate it by keeping our cache of last DNS to web alias which can be updated out of the system

Robustness

As is always the case, we want to have as robust system as we want. Other than performance, we need to care about data integrity and others:

- Exception handling - Make sure the system is not broken when a single page can’t be processed, allow for seamless recovery.

- Saving crawl states and data (yet to start, in progress, finished). Important piece of monitoring and allows for restarting specific crawls

- Consistent hashing to distribute load among downloaders

Extensibility

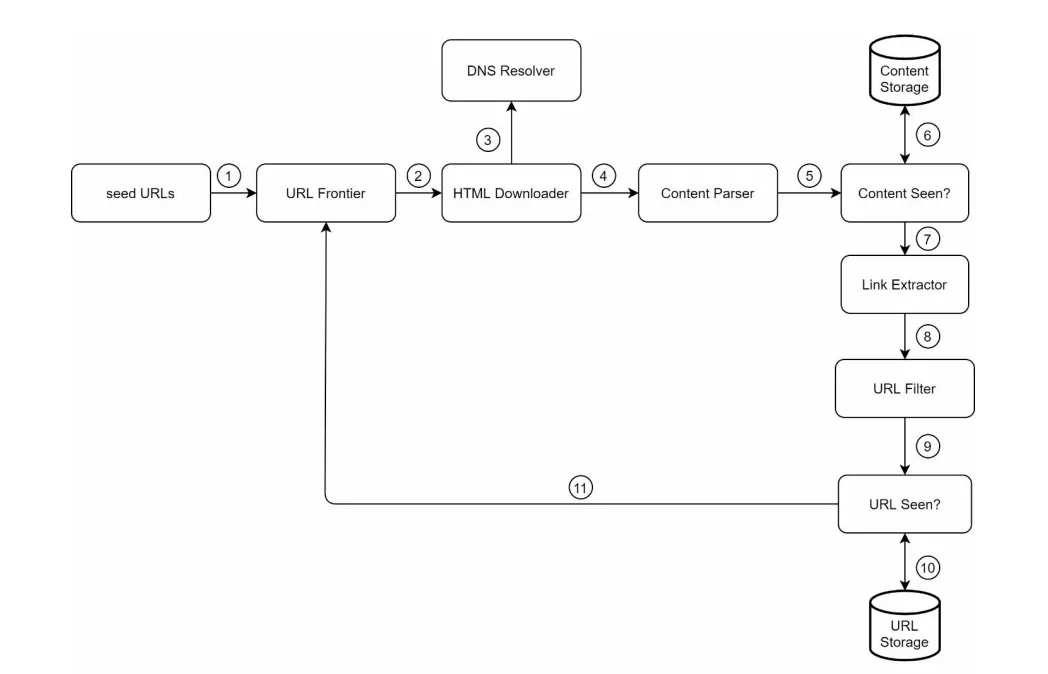

For extensibility, consider again the original design:

Now, imagine that we have decided to download all pictures from here. Where would we do that? Well, probably after downloading the content.

All systems need to be flexible, and crawler can be extended by supporting new content types and downloading specific content. Our system is extensible as of now, but with some new changes it might not be. This was just a test to see if we can extend it - and we can.

Problematic Content

Now, I’ve mentioned problematic content before. In this case, I’m talking more about problems for the crawler:

- A lot of pages are duplicates. As mentioned before, we can filter them to allow for better performance

- There are contents with little to no value, such as advertisements spread in multiple (and sometimes empty) tags to allow blocking ad-blocker. We can filter this out as well.

Finally, there are some pages that are called Spider traps. These could cause serious harm.

To give an idea, example.com is a page that allows anything in URL. So, we could potentially have something like example.com/foo/bar/foo/bar/foo/bar up to

maximum length. And each of these could render the same page - with only link being to another page.

These are called spider traps because there is no content and your crawler is still processing it, making it slow with no value.

We can do many things:

- Manually verify page is problematic and blacklist it

- Custom filters, such as maximum depth, etc.

Summary

So, here we discussed a web crawler. Hopefully that was fruitful and we’ve learned something new.

Keep in mind that there can be quite a few problems again:

- Horizontal scaling, database replication, availability and consistency

- Analytics

- Filtering out unwanted pages with low quality

- Server side rendered webs might need a different approach due to dynamic content in HTML

References